EVO: Rail – Integrated Hardware and Software

VMware has announced that it is entering the hyper-converged appliance market, working with hardware partners who will ship pre-built appliances running VMware software. See my introduction, VMware Marvin comes alive as EVO:Rail, a Hyper-Converged Infrastructure Appliance.

Each of the four compute nodes within the 2U appliance has a very specific minimum set of specifications. Some hardware vendors may go beyond this by adding, say, GPU cards for VDI or more RAM per host, but VMware wants a standard approach. Servers like this don't currently exist on the market, apart from the SuperMicro whitebox hardware that other hyper-converged companies use, so we're talking about new hardware from partners.

Each of the four EVO:RAIL nodes within a single appliance will have, at a minimum, the following:

- Two Intel E5-2620v2 six-core CPUs

- 192GB of memory

- One SLC SATADOM or SAS HDD for the ESXi boot device

- Three SAS 10K RPM 1.2TB HDDs for the VSAN datastore

- One 400GB MLC enterprise-grade SSD for read/write cache

- One VSAN-certified pass-through disk controller

- Two 10GbE NIC ports (either 10GBase-T or SFP+ connections)

- One 1GbE IPMI port for out-of-band management
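As a quick sanity check on the list above, the per-node minimums can be rolled up into per-appliance totals. This is a minimal Python sketch; the dictionary keys and the `appliance_totals` helper are hypothetical names for illustration, and it assumes every node ships exactly at the minimum spec.

```python
# Minimum per-node spec from the EVO:RAIL list (hypothetical field names).
NODES_PER_APPLIANCE = 4

node_spec = {
    "cpu_sockets": 2,
    "cores_per_cpu": 6,       # Intel E5-2620 v2 is a six-core part
    "memory_gb": 192,
    "capacity_hdds": 3,       # 1.2TB SAS 10K HDDs for the VSAN datastore
    "hdd_tb": 1.2,
    "cache_ssd_gb": 400,      # one MLC SSD per node for read/write cache
    "nic_10gbe_ports": 2,
}


def appliance_totals(spec: dict, nodes: int = NODES_PER_APPLIANCE) -> dict:
    """Multiply each per-node minimum by the number of nodes in the 2U box."""
    return {
        "physical_cores": nodes * spec["cpu_sockets"] * spec["cores_per_cpu"],
        "memory_gb": nodes * spec["memory_gb"],
        # round() hides floating-point noise from 12 * 1.2
        "raw_hdd_tb": round(nodes * spec["capacity_hdds"] * spec["hdd_tb"], 2),
        "cache_ssd_gb": nodes * spec["cache_ssd_gb"],
        "nic_10gbe_ports": nodes * spec["nic_10gbe_ports"],
    }


if __name__ == "__main__":
    for name, value in appliance_totals(node_spec).items():
        print(f"{name}: {value}")
```

At the minimum spec this works out to 48 physical cores, 768GB of RAM, 14.4TB of raw HDD capacity, 1,600GB of SSD cache and eight 10GbE ports per appliance.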

Each appliance is fully redundant with dual power supplies. As there are four ESXi hosts per appliance, you are covered for hardware failures and maintenance. The ESXi boot device and all HDDs and SSDs are enterprise-grade, and VSAN itself is resilient to host failures. EVO:RAIL version 1.0 can scale out to four appliances, giving you a total of 16 ESXi hosts backed by a single vCenter and a single VSAN datastore. There is also some new intelligence that automatically scans the local network for newly connected EVO:RAIL appliances and adds them to the EVO:RAIL cluster.
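The scale-out rule above is simple arithmetic. The sketch below (hypothetical helper names, assuming identical hosts and one host lost at a time) also shows how a larger cluster softens the impact of a single host failure or maintenance window.

```python
MAX_APPLIANCES_V1 = 4     # EVO:RAIL 1.0 scale-out limit
NODES_PER_APPLIANCE = 4   # four ESXi hosts per 2U appliance


def cluster_hosts(appliances: int) -> int:
    """Number of ESXi hosts in the single EVO:RAIL cluster."""
    if not 1 <= appliances <= MAX_APPLIANCES_V1:
        raise ValueError(
            f"EVO:RAIL 1.0 supports 1 to {MAX_APPLIANCES_V1} appliances, got {appliances}"
        )
    return appliances * NODES_PER_APPLIANCE


def capacity_left_after_host_loss(appliances: int) -> float:
    """Fraction of hosts still running if one host fails or is in maintenance."""
    hosts = cluster_hosts(appliances)
    return (hosts - 1) / hosts


if __name__ == "__main__":
    for n in range(1, MAX_APPLIANCES_V1 + 1):
        print(
            f"{n} appliance(s): {cluster_hosts(n)} hosts, "
            f"{capacity_left_after_host_loss(n):.1%} left after one host is lost"
        )
```

With a single appliance, losing one host leaves 75% of the cluster; with four appliances (16 hosts), it leaves 93.75%.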

VM Density

Each EVO:RAIL appliance is sized to run approximately 100 average-sized, general-purpose data center VMs, although actual mileage will vary depending on your workloads. This general-purpose calculation is based on VMs with 2 vCPU, 4GB RAM and a 60GB disk. For VDI with Horizon View, each appliance supports up to 250 desktops with the following profile: 2 vCPU, 2GB RAM and a 32GB disk using linked clones. I suspect 2GB RAM per desktop would be rather low, though.
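To see what those density figures imply, the sketch below compares each quoted VM profile against the appliance's raw resources at the minimum spec. It is a rough ratio check under stated assumptions (no ESXi, VSAN or management-VM overhead, no HA headroom), not VMware's sizing method; `density_summary` and the field names are hypothetical.

```python
# Per-appliance totals at the minimum spec: 4 nodes x 2 sockets x 6 cores,
# and 4 nodes x 192GB RAM.
APPLIANCE_CORES = 4 * 2 * 6      # 48 physical cores
APPLIANCE_RAM_GB = 4 * 192       # 768GB

# VM profiles quoted by VMware for its density figures.
PROFILES = {
    "general_purpose": {"vms": 100, "vcpu": 2, "ram_gb": 4, "disk_gb": 60},
    "horizon_view": {"vms": 250, "vcpu": 2, "ram_gb": 2, "disk_gb": 32},
}


def density_summary(profile: dict) -> dict:
    """Aggregate demand of one profile versus the appliance's raw resources.

    Ignores ESXi, VSAN and management-VM overhead, HA headroom and memory
    overcommit, so it is only a rough ratio check.
    """
    vcpus = profile["vms"] * profile["vcpu"]
    ram_gb = profile["vms"] * profile["ram_gb"]
    return {
        "total_vcpu": vcpus,
        "vcpu_per_core": round(vcpus / APPLIANCE_CORES, 2),
        "configured_ram_gb": ram_gb,
        "ram_share": round(ram_gb / APPLIANCE_RAM_GB, 2),
        # Nominal size; with linked clones, actual consumption is far lower
        # because the clones share a base disk.
        "provisioned_disk_gb": profile["vms"] * profile["disk_gb"],
    }


if __name__ == "__main__":
    for name, profile in PROFILES.items():
        print(name, density_summary(profile))
```

For the general-purpose profile this gives 200 vCPUs (about 4.2 per physical core) and 400GB of configured RAM, roughly half of the appliance's 768GB. The Horizon View profile gives 500 vCPUs (about 10.4 per core) and 500GB of RAM, so the desktop figure relies much more on CPU overcommit than on memory.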

Software

The EVO: RAIL software bundle is pre-loaded onto the partner’s hardware and consists of:

- The EVO:RAIL Deployment, Configuration, and Management

- vSphere Enterprise Plus

- ESXi for compute

- VSAN for storage

- vCenter Server

- vCenter Log Insight

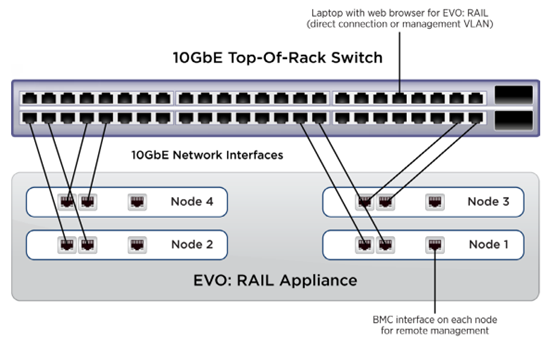

Network Connectivity

Each node in an EVO:RAIL 2U appliance has two 10GbE network ports, which must be connected to a 10GbE top-of-rack (ToR) switch with both IPv4 and IPv6 multicast enabled. You don't need to run IPv6 anywhere on your network other than on the EVO:RAIL ports, but IPv6 and multicast are used to discover new appliances when you plug them in, even before they have an IP address. If you might scale beyond one appliance, VMware also suggests reserving 16 IP addresses, preferably contiguous, up front for each of the ESXi, vMotion, and VSAN IP pools, which makes configuring additional appliances even easier. Each node also has a 1GbE IPMI port for DRAC/iLO-style management, which can connect to a management network on a separate switch from your 10GbE ToR switch.
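One way to follow the IP pool advice above is to carve each pool as a contiguous block and check that it fits in its subnet before deployment. The Python sketch below assumes the 16 addresses are reserved per pool (one per host in a full 16-host cluster); the subnets, starting addresses and `reserve_pool` helper are hypothetical, and this is a planning aid, not part of the EVO:RAIL tooling.

```python
import ipaddress

POOL_SIZE = 16  # assumption: one address per host, 16 hosts in a full 1.0 cluster


def reserve_pool(subnet: str, first_ip: str, size: int = POOL_SIZE) -> list:
    """Return `size` contiguous IPv4 addresses starting at first_ip, all in subnet."""
    network = ipaddress.IPv4Network(subnet)
    start = ipaddress.IPv4Address(first_ip)
    pool = [start + offset for offset in range(size)]
    # Every address must be a usable host address inside the subnet.
    hosts_ok = all(
        addr in network
        and addr != network.network_address
        and addr != network.broadcast_address
        for addr in pool
    )
    if not hosts_ok:
        raise ValueError(f"{size} addresses from {first_ip} do not fit in {subnet}")
    return pool


# Hypothetical addressing plan; substitute your own subnets.
PLAN = {
    "ESXi": ("192.168.10.0/24", "192.168.10.11"),
    "vMotion": ("192.168.20.0/24", "192.168.20.11"),
    "VSAN": ("192.168.30.0/24", "192.168.30.11"),
}

if __name__ == "__main__":
    for pool_name, (subnet, first) in PLAN.items():
        pool = reserve_pool(subnet, first)
        print(f"{pool_name}: {pool[0]} - {pool[-1]} ({len(pool)} addresses)")
```

Reserving the full block on day one means a second, third or fourth appliance can take the next free addresses without re-planning the subnets.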

EVO:RAIL supports four types of traffic: Management, vMotion, VSAN and VM traffic. It is best (although not required) to put each type other than Management on its own VLAN, since version 1.0 doesn't place management traffic on a VLAN. vMotion traffic is limited to 4Gbps so it doesn't consume all the bandwidth. Strangely, VMware has decided to use Active/Standby uplinks, with VM, vMotion and Management traffic active on vmnic0 and VSAN active on vmnic1, rather than making all uplinks active and letting NIOC handle the allocation.
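The Active/Standby layout described above can be modelled as a small table plus a failover rule. This Python sketch only shows which NIC carries each traffic type; it assumes each port group's standby uplink is the other NIC, the VLAN IDs and names such as `PortGroup` and `effective_uplink` are hypothetical, and it neither enforces the 4Gbps vMotion limit nor reproduces vSphere's actual teaming configuration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PortGroup:
    name: str
    vlan: Optional[int]          # None = untagged, as for Management in 1.0
    active: str                  # uplink used while it is healthy
    standby: str                 # uplink used only if the active one fails
    limit_gbps: Optional[float] = None  # recorded only, not enforced here


# Teaming layout as described for EVO:RAIL 1.0; VLAN IDs are hypothetical.
PORT_GROUPS = [
    PortGroup("Management", None, "vmnic0", "vmnic1"),
    PortGroup("vMotion", 20, "vmnic0", "vmnic1", limit_gbps=4.0),
    PortGroup("VM Network", 30, "vmnic0", "vmnic1"),
    PortGroup("VSAN", 40, "vmnic1", "vmnic0"),
]


def effective_uplink(pg: PortGroup, failed: set) -> Optional[str]:
    """Active/Standby failover: prefer the active NIC, fall back to standby."""
    if pg.active not in failed:
        return pg.active
    if pg.standby not in failed:
        return pg.standby
    return None  # both uplinks down


if __name__ == "__main__":
    for failed in (set(), {"vmnic0"}, {"vmnic1"}):
        mapping = {pg.name: effective_uplink(pg, failed) for pg in PORT_GROUPS}
        print(f"failed={sorted(failed)}: {mapping}")
```

With both NICs healthy, VSAN has vmnic1 to itself while everything else shares vmnic0; only after a failure does all traffic land on one port. An all-active design would instead spread traffic across both links in normal operation and rely on NIOC to arbitrate under contention.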

Storage

A single VSAN datastore is created from all local HDDs on each ESXi host in an EVO:RAIL cluster. SSD capacity is used for VSAN read caching and write buffering. Each EVO:RAIL appliance has 16TB of total raw storage capacity, including the HDDs and SSD cache, which gives you about 13TB usable per appliance. The pre-provisioned management VM is 30GB.
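The storage figures can be reproduced from the per-node spec. This sketch assumes decimal drive sizes and that the SSDs count toward the 16TB raw figure but not toward the datastore, which is how VSAN treats its cache tier. Expressing the 14.4TB of HDD capacity in binary units (about 13.1TiB) is one possible way to reconcile the "about 13TB usable" figure; that reading is an assumption, as is the default policy used for the mirrored-capacity estimate.

```python
NODES = 4
HDDS_PER_NODE = 3
HDD_GB = 1200      # 1.2TB SAS HDD, decimal units (10**9 bytes per GB)
SSD_GB = 400       # one cache SSD per node

raw_hdd_gb = NODES * HDDS_PER_NODE * HDD_GB    # 14,400GB
raw_ssd_gb = NODES * SSD_GB                    # 1,600GB
raw_total_gb = raw_hdd_gb + raw_ssd_gb         # 16,000GB, the "16TB" figure

# In VSAN the SSD is a cache tier only; datastore capacity comes from the HDDs.
datastore_bytes = raw_hdd_gb * 10**9
datastore_tib = datastore_bytes / 2**40        # same bytes in binary units

# Assumption: a policy with "Number of failures to tolerate" = 1 mirrors each
# object, so roughly half the datastore is available for VM data.
FTT1_COPIES = 2
effective_tib = datastore_tib / FTT1_COPIES

if __name__ == "__main__":
    print(f"raw total: {raw_total_gb / 1000:.1f}TB")
    print(f"datastore: {raw_hdd_gb / 1000:.1f}TB = {datastore_tib:.1f}TiB")
    print(f"usable for VMs at FTT=1: ~{effective_tib:.1f}TiB")
```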

EVO:RAIL introduces not only a new hardware appliance but also a new management layer to simplify deployment and management, covered in EVO: Rail – Management Re-imagined.

Recent Comments