AWS re:Invent 2017: Scaling Up to Your First 10 Million Users – ARC201

Benjamin Thurgood from AWS

Cloud is awesome: you can scale your web app or site on demand! But where do you start when you’re planning for big numbers of users? Making some design choices up front can dramatically simplify your life later.

Benjamin showed how best to link together different AWS services and use the capabilities of the AWS platform.

Autoscaling is only the beginning; there’s a lot more to look at.

Use Native Services

The first step is to use the AWS Global Infrastructure with its edge locations. Take advantage of the global AWS platform so you don’t have to reinvent the wheel.

Many native features are available, like CloudFront, S3, DynamoDB, EFS etc.

EC2, EBS, RDS etc. are scalable, but you need to work a little harder.

Lightsail is an easy way to get started as a developer.

You can scale up, but you’ll reach a limit at some point.

Split it out

The first thing to do is separate your web tier and your database tier so they can scale independently. You can self-manage a database you install on EC2 or go for a fully managed one with RDS, DynamoDB or Redshift. Aurora scales storage up to 64 TB with 15 read replicas, incremental backups to S3 and 6-way replication.

Start with SQL rather than NoSQL, as it’s established with lots of existing code.

Use Amazon Cognito for authentication, which I had used in my workshop yesterday, Build a Multi-Region Serverless Application for Resilience and High Availability Workshop.

As you scale, introduce a load balancer across AZs with multiple web instances and RDS DB standby and read replica instances.
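
To make the read replica idea concrete, here is a minimal sketch of how an application might split traffic between the primary and its replicas. The class name, the endpoint strings and the "starts with SELECT" check are all assumptions for illustration; they were not part of the talk. Replication to read replicas is asynchronous, so a real application would still send reads that must see a just-completed write to the primary.

```python
import itertools
from typing import List


class ReplicaRouter:
    """Send writes to the primary and spread reads across read replicas."""

    def __init__(self, primary: str, replicas: List[str]):
        self._primary = primary
        # Round-robin over replicas; None when no replicas are configured.
        self._replicas = itertools.cycle(replicas) if replicas else None

    def endpoint_for(self, sql: str) -> str:
        # Naive classification (assumption): anything starting with SELECT is a read.
        is_read = sql.lstrip().upper().startswith("SELECT")
        if is_read and self._replicas is not None:
            return next(self._replicas)
        return self._primary


# Hypothetical endpoint names, for illustration only.
router = ReplicaRouter("db-primary.example.internal",
                       ["db-replica-1.example.internal",
                        "db-replica-2.example.internal"])
print(router.endpoint_for("SELECT * FROM users"))
print(router.endpoint_for("UPDATE users SET name = 'x'"))
```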

Application Load Balancer is recommended for web servers and has good health checking for your application as well as content based routing.

If you have extreme performance requirements, use the Network Load Balancer, as it works at layer 4.

This will help you scale up to 100,000 users.

For more scale, serve static web pages from S3 via CloudFront. S3 is highly durable and great for static assets, with encryption at rest and in transit.

CloudFront caches content, which can reduce latency and response times as well as server load.

To go further you can cache database content with ElastiCache which is managed Memcached or Redis.
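
A common way to use such a cache is the cache-aside pattern: look in the cache first and only hit the database on a miss. The sketch below is an assumption about how you might wire this up, not something shown in the session; a plain dictionary stands in for the Redis or Memcached client, and `load_from_db` is a hypothetical callable you would supply.

```python
import time
from typing import Callable, Dict, Tuple


class CacheAside:
    """Cache-aside: check the cache first, fall back to the database on a miss."""

    def __init__(self, load_from_db: Callable[[str], str], ttl_seconds: float = 60.0):
        self._load_from_db = load_from_db
        self._ttl = ttl_seconds
        # Stand-in for a Redis/Memcached client: key -> (expiry time, value).
        self._store: Dict[str, Tuple[float, str]] = {}
        self.db_reads = 0

    def get(self, key: str) -> str:
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit, no database work
        value = self._load_from_db(key)  # cache miss: read from the database
        self.db_reads += 1
        self._store[key] = (now + self._ttl, value)
        return value

    def invalidate(self, key: str) -> None:
        # Call this after writing to the database so stale data is not served.
        self._store.pop(key, None)
```

The TTL bounds how stale a cached row can get; invalidating on write keeps hot keys fresh without waiting for expiry.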

DynamoDB is also a good place to store session data from your web tier.

Use AWS Database Migration Service to migrate data across the AWS system, possibly with no downtime.

DynamoDB Accelerator (DAX) is a cache for DynamoDB reads.

At this stage you’ve lightened your web tier by spreading it out.

You can then revisit Auto Scaling, which uses CloudWatch metrics to increase or decrease the size of your web tier. It’s more effective with your now lighter web tier, as your back-end services scale separately with better database use.
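
To give a feel for metric-driven scaling, here is a simplified proportional rule: size the fleet so the observed metric would land back on its target. This is an illustration only and is not AWS's actual Auto Scaling algorithm; the function name and parameters are made up for the example.

```python
import math


def desired_capacity(current_instances: int, observed_metric: float,
                     target_metric: float, min_size: int, max_size: int) -> int:
    """Proportional scaling rule, clamped to the group's min and max size."""
    if current_instances < 1 or target_metric <= 0:
        raise ValueError("need at least one instance and a positive target")
    # If 4 instances run at 80% CPU and the target is 50%, we need 4 * 80 / 50 = 6.4,
    # rounded up so the fleet is never undersized.
    raw = math.ceil(current_instances * observed_metric / target_metric)
    return max(min_size, min(max_size, raw))


print(desired_capacity(4, 80.0, 50.0, min_size=2, max_size=10))  # 7
```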

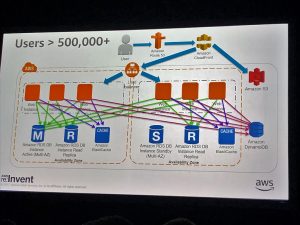

You’re now good for up to 500,000 users.

You can now start adding in some more automation for things that don’t natively scale. Use higher level services like Elastic Beanstalk or OpsWorks (with Chef or Puppet) or do it yourself with CloudFormation or EC2. Basically, have a system that lets you build more infrastructure automatically and easily.

CodePipeline is also worth a look, used with CodeCommit, CodeBuild and CodeDeploy for a fully managed CI/CD pipeline.

AWS CodeStar is a quick start for developing applications.

Of course, if you have not done so already, you need to build in monitoring, metrics and logging to know what’s going on inside as well as what the user experience is like.

Use CloudWatch percentiles, as they help you avoid missing a performance issue that stays hidden if you’re just looking at averages.
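
A small worked example shows why. The numbers below are invented, and the nearest-rank percentile used here is one common definition; CloudWatch may compute its percentile statistics differently. With 98 fast requests and 2 very slow ones, the average looks tolerable while the 99th percentile exposes the slow tail.

```python
import math
from statistics import mean
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of samples at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical latencies: 98 requests at 100 ms, 2 requests at 5000 ms.
latencies_ms = [100.0] * 98 + [5000.0] * 2

print(mean(latencies_ms))            # 198.0
print(percentile(latencies_ms, 50))  # 100.0
print(percentile(latencies_ms, 99))  # 5000.0
```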

More than 500,000

You need to start looking at the things that are running in EC2 instances and breaking them up into microservices. Use the ideas of Service Orientated Architecture for loose coupling and serverless so you don’t reinvent the wheel.

Then it’s on to event-driven computing for serverless with Lambda, which is very scalable and relatively easy compared to scaling servers on EC2 instances.

There are lots of ways to do this, using API Gateway with all the other services Lambda can use, and a client framework running in your browser rather than on the server side.
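
As a rough idea of what the Lambda side can look like, here is a minimal Python handler, assuming API Gateway is configured with Lambda proxy integration (which passes query parameters in `queryStringParameters` and expects a `statusCode`/`headers`/`body` response). The greeting logic and the `name` parameter are invented for the example.

```python
import json


def handler(event, context):
    """Return a JSON greeting for an API Gateway proxy-integration request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        # The body must be a string, so the payload is serialized here.
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is a plain function, it can be exercised locally by passing a dictionary shaped like the event, with no servers to scale or patch.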

AWS X-Ray is a way to identify performance bottlenecks and errors to pinpoint issues.

Getting to more than 1 million users takes a bit of all the other things talked about:

- Use Multi-AZ

- Elastic load balancing

- Auto scaling

- Service Orientated Architecture

- Serve content smartly from S3/CloudFront

- Caching of DB

- Moving state off tiers that auto scale.

Next: it’s often database issues that cause contention.

Solve it with:

- Federation: Splitting into multiple DBs based on function/purpose.

- Sharding: Splitting one dataset up across multiple hosts, for example to separate users by name.

- Shift to NoSQL: For some functionality like leaderboards/scoring, rapid ingest of streams, hot tables and metadata/lookup tables.
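
The first two options can be sketched in a few lines. Everything here is hypothetical: the host names, the function-to-database mapping and the choice of a hash-based shard key are assumptions for illustration (the talk's example of separating users by name would be a range-based scheme instead). A stable hash is used because Python's built-in `hash()` for strings changes between processes.

```python
import hashlib

# Federation: one database per function/purpose (hypothetical hosts).
FEDERATED_DBS = {
    "users": "users-db.example.internal",
    "orders": "orders-db.example.internal",
    "forums": "forums-db.example.internal",
}

# Sharding: the users dataset split across several hosts (hypothetical hosts).
USER_SHARDS = [
    "users-shard-0.example.internal",
    "users-shard-1.example.internal",
    "users-shard-2.example.internal",
    "users-shard-3.example.internal",
]


def database_for(function: str) -> str:
    """Federation lookup: pick the database that owns this function."""
    return FEDERATED_DBS[function]


def shard_for(user_key: str, shard_count: int) -> int:
    """Map a user key to a shard index using a hash that is stable across processes."""
    digest = hashlib.sha256(user_key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def user_host(user_key: str) -> str:
    """Resolve the host that stores this user's rows."""
    return USER_SHARDS[shard_for(user_key, len(USER_SHARDS))]
```

Note that changing the number of shards with a plain modulo scheme moves most keys, so resharding needs a migration plan.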

This was an overview session and a walk through of many AWS services but it was useful for people to see how large the AWS ecosystem is and what’s available natively from AWS so you don’t have to do it yourself. It had a LOT of information and was well presented. A good session to have in your head as you’re planning anything so you have a scale roadmap.