AWS re:Invent 2017: Become a Serverless Black Belt: Optimizing Your Serverless Applications – SRV401

Ajay Nair from AWS and Peter Sbarski from A Cloud Guru

Another session on architectural best practices, with a bunch of handy little things to help you out. It was an advanced session, so there were no “what is serverless” overviews.

Multiple Points to Optimise

With traditional application stacks you normally optimise by packing things together, but with serverless you do the opposite, as it’s generally better and more scalable if things are spread out.

There are three components to look at: the interface (via API Gateway or Alexa), the compute (Lambda) and the data (S3, DynamoDB etc.).

The main goal is to reduce latency, which doesn’t just take time but also costs you more. The more functions you string together, the more latency issues will bug you.

The Lean Function

Anatomy of a function = the function + language runtime + function container + compute substrate

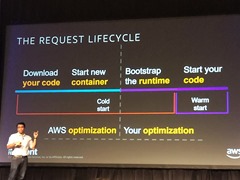

When a function is invoked, Lambda:

- downloads your code

- starts new container

- bootstraps the runtime

- starts the code.

Everything before your code starts is the cold start. AWS optimises the first two stages (and has seen an 80% improvement in latency for some scenarios); your job is to optimise the last two.

Try to make your logic as concise as possible:

- efficient / single purpose code

- avoid fat / monolithic functions

- control the dependencies in the package

- optimise for your language

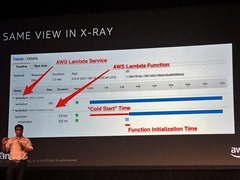

You can also see the start times in X-Ray.

For Java, avoid depending on the whole SDK (aws-java-sdk) directly; use only the subcomponents you need, such as aws-java-sdk-s3 or aws-java-sdk-dynamodb.

This reduces your dependency size.

Ephemeral Environment

Generally each function runs as a single process per container, but multi-threading can sometimes help.

- Below 1.8 GB of memory you still get a single core

- Above 1.8 GB you get multiple cores, but CPU-bound workloads need to be written multi-threaded to benefit

- I/O-bound workloads will likely see gains, e.g. parallel calculations.
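As a rough illustration of the I/O-bound case, the sketch below runs several slow calls through a thread pool so the waits overlap. The `fetch` function, its 0.1 second sleep and the event shape are assumptions standing in for a real network or storage call; they are not from the session.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(item_id):
    """Stand-in for an I/O-bound call (HTTP request, S3 read, database query)."""
    time.sleep(0.1)  # simulated network wait; the thread is idle here
    return {"id": item_id, "status": "ok"}


def fetch_all(item_ids, max_workers=8):
    """Run the I/O-bound calls concurrently and return results in input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(fetch, item_ids))


def handler(event, context):
    # Hypothetical event shape: {"ids": [...]}
    results = fetch_all(event["ids"])
    return {"count": len(results), "results": results}
```

Because the threads spend their time waiting rather than computing, this kind of overlap can help even on a single core.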

Containers are reused, so lazily load variables in the global scope: they are initialised once and kept for later invocations.

Don’t load it if you don’t need it, as cold starts are affected.

Concise Function Logic

Separate the Lambda handler (entry point) from the core logic
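One possible shape for this separation is sketched below. The order data, the `summarise_order` function and the API Gateway style event (a JSON string under `body`) are assumptions for illustration only.

```python
import json


def summarise_order(order):
    """Core logic: plain Python, no Lambda types, easy to unit test."""
    total = sum(item["price"] * item["quantity"] for item in order["items"])
    return {"order_id": order["id"], "total": round(total, 2)}


def handler(event, context):
    """Entry point: translate the event in, call the logic, translate the result out."""
    order = json.loads(event["body"])
    return {"statusCode": 200, "body": json.dumps(summarise_order(order))}
```

Keeping the handler this thin means the core logic can be tested, or reused behind a different trigger, without building Lambda events.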

Use functions to transform, not transport.

Read only what you need

Try to reduce the amount of data you send to Lambda: use query filters in Aurora or the new S3 Select. This can dramatically reduce your function’s run time, which directly impacts cost.

Don’t wait for input inside your function, as you are paying for the time it sits idle.

Use smart resource allocation to match the memory allocation (now up to 3 GB) to your logic. Sometimes it’s worth allocating more memory to speed up the function: because it finishes sooner, you can end up paying about the same. Do some testing.

There is a Step Functions state machine generator called AWS Lambda Power Tuning, which runs your function at different memory sizes so you can see the effect. This should be part of your testing.
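The memory versus cost trade-off comes down to simple arithmetic, since compute is billed on memory multiplied by duration. The sketch below assumes a price of $0.00001667 per GB-second and uses made-up durations for a CPU-bound function; both are assumptions, so check current pricing and measure your own function.

```python
PRICE_PER_GB_SECOND = 0.00001667  # assumed list price; check current pricing


def invocation_cost(memory_mb, duration_ms):
    """Compute cost of one invocation in dollars (per-request fee excluded)."""
    gb_seconds = (memory_mb / 1024) * (duration_ms / 1000)
    return gb_seconds * PRICE_PER_GB_SECOND


# Hypothetical measurements for the same function: memory in MB -> duration in ms
measurements = {128: 8000, 512: 2000, 1024: 1000}

for memory_mb, duration_ms in measurements.items():
    cost = invocation_cost(memory_mb, duration_ms)
    print(f"{memory_mb:>5} MB  {duration_ms:>5} ms  ${cost:.8f}")
```

With these hypothetical numbers each configuration uses exactly one GB-second, so the cost is identical while the largest setting responds eight times faster. Real functions rarely scale this neatly, which is why testing matters.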

Ideally don’t have orchestration within your code, as you waste money waiting while idle. Instead, take the orchestration out of the function and use separate functions for the pre- and post-processing.

Eventual Invocation

Succinct payloads, resilient routing, concurrent execution

If you’re using AWS services there is automatic retrying, for example if there’s an issue getting data from S3. If it’s not a native AWS service, say you’re getting data from an IoT device, you need to decide how to scale invocation.

Use a suitable entry point for client applications

- custom client: use the AWS SDK

- not end-user facing: use regional endpoints on API Gateway

Discard uninteresting events ASAP, before they get to Lambda. Because you pay per invocation, only send Lambda the events you want it to act on rather than have Lambda do the discarding. Use S3 event prefixes or SNS message filtering.
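As a sketch of filtering at the source, the function below builds an S3 bucket notification configuration that only triggers Lambda for keys matching a prefix and suffix. The ARN, prefix and suffix are placeholders, and the suffix rule is an addition beyond the prefix filtering mentioned in the session.

```python
def s3_notification_config(function_arn, prefix, suffix):
    """Build a bucket notification config that only fires for matching keys."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


config = s3_notification_config(
    "arn:aws:lambda:us-east-1:123456789012:function:resize-image",  # placeholder ARN
    prefix="uploads/",
    suffix=".jpg",
)
# With boto3, a dict like this would be passed as NotificationConfiguration to
# put_bucket_notification_configuration on an S3 client (not called here).
```

Objects written outside `uploads/` or without a `.jpg` suffix would then never invoke the function, so there is nothing to pay for.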

Scrutinise your invocations. Each invocation must have provenance: “what happened for this notification to occur?”

Remember there are payload constraints: async invocation is only 128 KB.

Maybe switch to binary payloads, which can sometimes be 40% smaller than JSON.
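To get a feel for the size difference, the sketch below encodes the same hypothetical sensor readings as JSON and as fixed-width binary records using Python’s `struct` module. The data and the record layout are assumptions; the saving you see depends heavily on the shape of your payload.

```python
import json
import struct

# Hypothetical readings: (unix timestamp, temperature)
readings = [(1511913600 + i, 20.0 + i * 0.25) for i in range(100)]

json_payload = json.dumps(
    [{"timestamp": ts, "temperature": temp} for ts, temp in readings]
).encode("utf-8")

# "<If": little-endian unsigned 32-bit int + 32-bit float = 8 bytes per reading
binary_payload = b"".join(struct.pack("<If", ts, temp) for ts, temp in readings)


def decode(payload):
    """Unpack the 8-byte records back into (timestamp, temperature) tuples."""
    return [struct.unpack_from("<If", payload, offset)
            for offset in range(0, len(payload), 8)]


print(len(json_payload), "bytes as JSON")
print(len(binary_payload), "bytes as binary")
```

The trade-off is that a binary layout is a contract both sides must share, and 32-bit floats lose precision for values that are not exactly representable.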

Concurrency

You need to think about concurrency, the number of invocations running at the same time, because it limits how many transactions per second you can process. Latency has a direct impact: the longer your function runs, the fewer events per second a given level of concurrency can handle. You may also need to increase the number of stream shards to help concurrency.
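The link between latency and concurrency can be made concrete with a little arithmetic: the processing rate is roughly concurrency divided by average duration. The sketch below uses that relationship with hypothetical numbers and ignores retries, batching and throttling.

```python
import math


def max_events_per_second(concurrency, avg_duration_ms):
    """Upper bound on throughput for a given number of concurrent executions."""
    return concurrency * 1000 / avg_duration_ms


def required_concurrency(events_per_second, avg_duration_ms):
    """Concurrent executions needed to keep up with a given arrival rate."""
    return math.ceil(events_per_second * avg_duration_ms / 1000)


# Hypothetical numbers: 100 concurrent executions, before and after tuning
print(max_events_per_second(100, 500))   # slower function
print(max_events_per_second(100, 200))   # faster function, same concurrency
print(required_concurrency(100, 200))    # executions needed for 100 events/s
```

Cutting the duration raises the throughput you get from the same concurrency, which is why function run time and concurrency have to be tuned together.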

Resiliency

You should understand retry policies:

- sync invocations are never retried

- async invocations are retried 2 times

- stream-based invocations are retried until the data expires

You may need to persist your events, so perhaps create an event store or Dead Letter Queue so things don’t get lost for retries or state. You can then replay from the event store via SNS or SQS and have more visibility into what failed. Use Kinesis as the bridge to Lambda, although the durability comes with a trade-off as you now have another component to manage.

Remember, retries count as invokes.

Always configure a minimum of 2 Availability Zones.

Give Lambda functions their own subnets in a large IP range to handle potential scale. This means you won’t drain IP addresses from subnets used by other services.

Coordinated Calls

Decoupled via APIs

Use APIs as contracts, so for example split out an ingestion service, a metadata service and a front-end service.

Scale-Matched Downstream

For non-API calls such as DB calls you need scale matching and concurrency controls.

Basically you need to match what Lambda can send for processing to the throughput your back-end system can handle. You can cap concurrency to keep some control and not blow up your databases if you have the wrong instance size.
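One hedged way to pick a cap is to work backwards from what the database can accept. The helper below is a hypothetical sketch: the connection limit, the number of connections each invocation opens and the 80% headroom are all assumptions to replace with your own figures.

```python
def safe_concurrency_limit(max_db_connections, connections_per_invocation=1,
                           headroom_percent=80):
    """Suggest a Lambda concurrency cap that stays under the database's limit."""
    usable = max_db_connections * headroom_percent // 100  # leave room for other clients
    return max(1, usable // connections_per_invocation)


limit = safe_concurrency_limit(max_db_connections=100, connections_per_invocation=2)
print(limit)
# The result could then be applied as the function's reserved concurrency,
# for example with boto3's put_function_concurrency (not called here).
```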

Secured

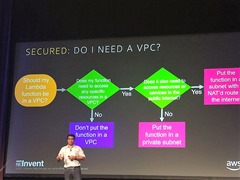

Lambda security needs to be thought through for remote access. Should my Lambda function be in a VPC?

If your function needs specific resources in a VPC, then give it access. Think about external access too: if the function needs internet access, put it in a subnet with a NAT route to the internet. If it doesn’t, a private subnet will do.

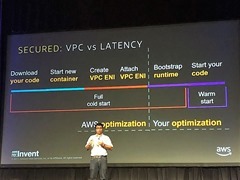

There is a cold start time increase if your function needs to cross into a VPC, as the ENIs need to be created and attached.

Serviceful Operations

Peter Sbarski from A Cloud Guru then came on stage to talk about this.

Lambda is very clever technology, but you need to train your people to take advantage of it.

A Cloud Guru makes about 6.2 million function invocations a week. They have 480 Lambda functions across 15 environments, with 142 S3 buckets and 7 TB of data a week coming via CloudFront.

All this means that optimising the functions saves them a lot of money.

He suggested three things to help:

Automated Operations

Automate all the testing.

There is no such thing as NoOps though. There is still a big role for people, which he mentioned a number of times.

Monitored Applications

Monitor as much as you can; X-Ray is very useful, as is CloudTrail.

Hit everything to check it is working; they use Runscope.

Don’t forget security with serverless: IAM rules need to be as tight as they can be, ideally a rule per function. Use KMS for secrets management.

You also need to think about normal operational security. Could someone inject rogue code into Lambda? How are you checking that you aren’t using components with known vulnerabilities? All dependencies need to be checked.

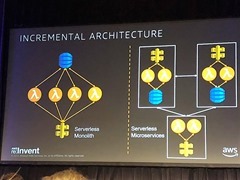

Serverless is inevitably about creating an incremental architecture.

You will start with a serverless monolith: multiple Lambda functions talking to a single database. Once you scale up, you then need to use multiple databases with separate functions.

Another interesting point, back on the human angle: if you initiate a serverless project at work, you will likely get developers fired up and engaged who would love to work on it.

A very deep-dive session with plenty of tips and tricks for managing your serverless architecture, which ultimately saves you money.
