AWS re:Invent 2018: Supercharge VMware Cloud on AWS Environments with Native AWS Services – CMP360

Andy Reedy, Aarthi Raju and Wen Yu from AWS

Being a long-time VMware customer, I’m very interested in what VMware Cloud on AWS (VMConAWS) could offer. It’s early days and I’m not sure what customer take-up has been so far, but there is certainly industry buzz, attention and interested customers. It’s VMware’s current big push to be seen as a cloud company. One of the criticisms levelled at VMConAWS is that it’s just a glorified colo: you move your current virtualisation layer from on-prem or an existing colo to another colo, this time hosted by AWS. AWS, however, isn’t just another colo!

One of the obvious big benefits of hosting your VMware workloads in AWS is being close to the rest of what AWS offers. This could be the hook that helps you decide to use the service, as VMConAWS comes with built-in integration with native AWS services. Are these integrations just a way to get you to use more AWS as a VMware customer and, in time, shrink your VMware investment as you take advantage of more native AWS services? Time will tell, but the integrations with native AWS services could be very useful if you’re wanting to migrate and host your VMware workloads in a new “colo”.

Overview

The session started with an intro to the service, highlighting the SDDC construct with NSX and vSAN, packaged as “vSphere as a Service” and ready to run workloads within 90 minutes. Use cases are cloud migration, data center extension, disaster recovery and app modernisation using native AWS services. You can add host capacity in 12 minutes. It’s managed by VMware, who do the patching, updates and upgrades. There’s also host remediation, so if a host dies, AWS will replace it automatically. Clusters can range from three to 32 hosts, with up to 10 clusters per SDDC. Because capacity can be added so quickly, you can run your clusters much hotter rather than leaving spare capacity idle. The AWS hosts use the I3 bare metal offering with NVMe for storage, or the R5 instances, which have even more storage. EBS-backed vSAN also allows you up to 35 TB per node.
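The sizing limits quoted in the session are easy to encode as a quick planning check. This is a minimal sketch; the function name is hypothetical, and the numbers are the 2018 figures from the talk, which VMware may since have changed.

```python
# Limits as quoted in the session (re:Invent 2018); treat them as
# illustrative, since VMware may have changed them since.
MIN_HOSTS_PER_CLUSTER = 3
MAX_HOSTS_PER_CLUSTER = 32
MAX_CLUSTERS_PER_SDDC = 10


def validate_sddc_layout(cluster_sizes: list[int]) -> list[str]:
    """Hypothetical helper: list the problems with a proposed SDDC layout.

    Each entry in cluster_sizes is the host count of one cluster.
    An empty result means the layout fits the quoted limits.
    """
    problems: list[str] = []
    if len(cluster_sizes) > MAX_CLUSTERS_PER_SDDC:
        problems.append(
            f"{len(cluster_sizes)} clusters exceeds the limit of "
            f"{MAX_CLUSTERS_PER_SDDC} per SDDC"
        )
    for index, hosts in enumerate(cluster_sizes):
        if not MIN_HOSTS_PER_CLUSTER <= hosts <= MAX_HOSTS_PER_CLUSTER:
            problems.append(
                f"cluster {index} has {hosts} hosts; allowed range is "
                f"{MIN_HOSTS_PER_CLUSTER} to {MAX_HOSTS_PER_CLUSTER}"
            )
    return problems


print(validate_sddc_layout([4, 32]))  # []
print(validate_sddc_layout([2]))  # one problem: cluster 0 is too small
```

Because hosts arrive in minutes rather than weeks, a check like this is more about staying inside the service limits than about pre-buying headroom.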

Some poor-quality photos, but they show that it looks just like any other SDDC and vCenter.

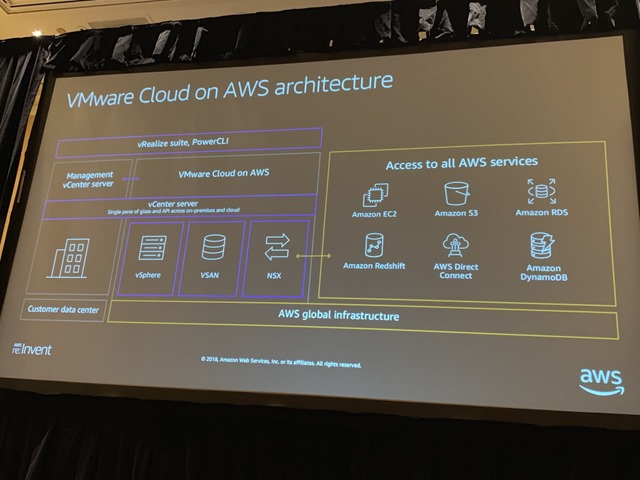

Architecture

Andy went through the architecture, showing what is part of VMware and what is part of AWS, and talked through Hybrid Linked Mode, which gives you a single view of your multiple datacenters connecting back to on-prem. A picture is worth a thousand words!

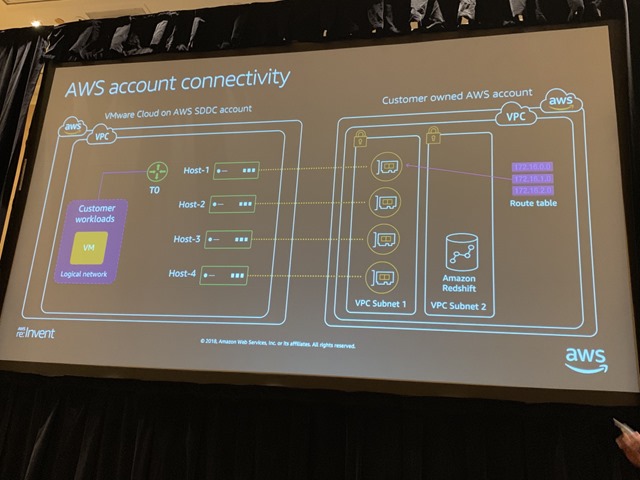

Elastic Network Interface

The ENI is the magic network connection that links VMware Cloud on AWS to your environment. When you set up VMConAWS, you specify which AWS account you want to link it to. If you’re more familiar with AWS, it works similarly to RDS: when you connect your VPC to RDS, an ENI is created automatically for connectivity. A single ENI is created for each host, with an initial seed of 17 ENIs that expands automatically if you have more hosts. 17 was picked as the average cluster size, so that there are enough IPs available on the subnet. This is called a cross-account ENI. There is no VPC peering, as AWS doesn’t do the underlay and overlay networks that would be used with NSX.
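The seed-of-17 detail matters when you size the subnet you hand over during setup. Here is a rough sketch of that arithmetic, assuming the pool is simply one ENI per host with a floor of 17, as described in the session. The helper names are hypothetical; the five reserved addresses per subnet are standard AWS VPC behaviour.

```python
import ipaddress

# Figure quoted in the session: the cross-account ENI pool starts at 17
# and grows with the host count. Treat it as illustrative.
INITIAL_ENI_SEED = 17
# AWS reserves five addresses in every VPC subnet (network address,
# VPC router, DNS, future use, broadcast).
AWS_RESERVED_PER_SUBNET = 5


def enis_needed(host_count: int) -> int:
    """One ENI per host, but never fewer than the initial seed."""
    return max(INITIAL_ENI_SEED, host_count)


def subnet_can_hold(cidr: str, host_count: int) -> bool:
    """Hypothetical planning helper: does the subnet have enough usable IPs?

    It ignores anything else that might share the subnet (instances,
    endpoints, load balancers), so treat a True result as a lower bound.
    """
    network = ipaddress.ip_network(cidr)
    usable = network.num_addresses - AWS_RESERVED_PER_SUBNET
    return usable >= enis_needed(host_count)


print(enis_needed(4))  # 17
print(subnet_can_hold("10.0.0.0/27", 4))  # True: 27 usable IPs
print(subnet_can_hold("10.0.0.0/28", 4))  # False: 11 usable IPs
```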

Integration

Next up was connecting VMware to native AWS services. Aarthi went through how the ENIs talk to NSX and how the routing table is managed, including failover. For multiple VPCs, a VPN connection can be used, which can now also use BGP. There are no network data charges if the two sides are in the same Availability Zone (AZ), so pick your AZ subnets carefully during setup to avoid charges. With a stretched cluster, you want your primary hosts in the same AZ as your workloads. You can also use vSAN locality, but there are data charge considerations for failover.
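The same-AZ rule lends itself to a quick audit of your traffic paths. The sketch below is hypothetical (the `Flow` type and the flow names are made up for illustration) and only flags which flows cross an AZ boundary; it does not price anything. It compares AZ IDs such as `usw2-az1` rather than AZ names, because the SDDC lives in a VMware-owned AWS account and the same AZ name can map to a different physical AZ in different accounts.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Flow:
    """Hypothetical traffic path between an SDDC VM and a native AWS service."""
    name: str
    sddc_az_id: str  # AZ ID of the SDDC host running the VM
    aws_az_id: str   # AZ ID of the native AWS resource


def cross_az_flows(flows: list[Flow]) -> list[str]:
    """Names of flows that cross an AZ boundary and may incur data charges."""
    return [f.name for f in flows if f.sddc_az_id != f.aws_az_id]


def fail_over(flows: list[Flow], failed_az_id: str, surviving_az_id: str) -> list[Flow]:
    """Model a stretched-cluster failover: SDDC-side VMs move to the surviving AZ."""
    return [
        replace(f, sddc_az_id=surviving_az_id) if f.sddc_az_id == failed_az_id else f
        for f in flows
    ]


flows = [
    Flow("app-to-rds", "usw2-az1", "usw2-az1"),
    Flow("app-to-efs", "usw2-az1", "usw2-az2"),
]
print(cross_az_flows(flows))  # ['app-to-efs']
print(cross_az_flows(fail_over(flows, "usw2-az1", "usw2-az2")))  # ['app-to-rds']
```

The failover case is the point the speakers flagged: a path that was free in normal operation can start crossing AZs once a stretched cluster fails over.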

On the native AWS side, you can use an S3 VPC endpoint to avoid going over public IP space to reach S3 storage. You can also go via the ENI to NSX for EFS. The newly announced AWS DataSync uses an on-prem appliance that you write to; it compresses and encrypts data before sending it up to S3 or EFS much faster (up to 10 times!).
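An S3 gateway endpoint is created in the connected VPC with the EC2 `CreateVpcEndpoint` API. This sketch only builds the request arguments, so it runs without AWS credentials. The helper name, VPC ID and route table ID are placeholders, and which route tables you attach depends on your own setup.

```python
def s3_gateway_endpoint_request(
    region: str, vpc_id: str, route_table_ids: list[str]
) -> dict:
    """Hypothetical helper: keyword arguments for EC2 CreateVpcEndpoint.

    A gateway endpoint adds routes for S3 to the given route tables, so
    traffic to S3 from that VPC stays off the public internet path.
    """
    return {
        "VpcEndpointType": "Gateway",
        "VpcId": vpc_id,
        "ServiceName": f"com.amazonaws.{region}.s3",
        "RouteTableIds": list(route_table_ids),
    }


request = s3_gateway_endpoint_request("eu-west-2", "vpc-0example", ["rtb-0example"])
print(request["ServiceName"])  # com.amazonaws.eu-west-2.s3
# With boto3 installed and credentials configured, you would then call:
#   boto3.client("ec2", region_name="eu-west-2").create_vpc_endpoint(**request)
```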

Load-Balancing

A short demo showed an AWS load balancer with an IP-based target group sending traffic back to your VMware VMs, whether on-prem or in VMConAWS. This is a great use case: AWS load balancers are heavily used, and you can now also use a Web Application Firewall (WAF) to protect VMware VMs fronted by AWS load balancing.
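Here is a sketch of the request arguments behind that demo, built for the Elastic Load Balancing v2 `CreateTargetGroup` and `RegisterTargets` APIs. Two ELB rules shape it. IP targets must come from the VPC's own subnets or from private ranges (RFC 1918 and RFC 6598), and an IP outside the VPC, such as a VM in the SDDC or on-prem, must be registered with `AvailabilityZone` set to `"all"`. The helper names, ARN and addresses are hypothetical placeholders, and the code builds dictionaries only, so no AWS call is made.

```python
import ipaddress

# Private ranges ELB accepts for IP targets outside the target group's VPC.
ALLOWED_OUTSIDE_VPC = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16", "100.64.0.0/10")
]


def ip_target_group_request(name: str, vpc_id: str, port: int) -> dict:
    """Hypothetical helper: kwargs for elbv2 create_target_group with IP targets."""
    return {"Name": name, "Protocol": "HTTP", "Port": port,
            "VpcId": vpc_id, "TargetType": "ip"}


def register_targets_request(
    target_group_arn: str, vpc_cidr: str, vm_ips: list[str], port: int
) -> dict:
    """Hypothetical helper: kwargs for elbv2 register_targets.

    VMs outside the VPC (SDDC or on-prem) get AvailabilityZone "all";
    publicly routable addresses are rejected.
    """
    vpc = ipaddress.ip_network(vpc_cidr)
    targets = []
    for raw in vm_ips:
        ip = ipaddress.ip_address(raw)
        in_vpc = ip in vpc
        if not in_vpc and not any(ip in net for net in ALLOWED_OUTSIDE_VPC):
            raise ValueError(f"{raw} is not a valid IP target")
        target = {"Id": raw, "Port": port}
        if not in_vpc:
            target["AvailabilityZone"] = "all"
        targets.append(target)
    return {"TargetGroupArn": target_group_arn, "Targets": targets}


req = register_targets_request(
    "arn:aws:elasticloadbalancing:example", "10.0.0.0/16",
    ["10.0.1.5", "192.168.10.20"], 80,
)
print(req["Targets"][1])  # {'Id': '192.168.10.20', 'Port': 80, 'AvailabilityZone': 'all'}
```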

RDS on vSphere

I was hoping to get more information on RDS on vSphere, which was announced at VMworld this year, but it’s not really part of VMware Cloud on AWS so it wasn’t covered. This sounds like an exciting development: keep your existing app layer wherever it runs and transition your on-prem database to Amazon RDS, while still running it on-prem. This is the first time AWS has decided to offer an on-prem service that isn’t IoT (Greengrass) or pure data ingestion (Snowball) focused.

RDS on vSphere has been touted as an AWS-managed RDS instance running in your own datacenter. Is it a hybrid setup, with your on-prem vSphere environment acting as a new AWS AZ so that your Multi-AZ RDS instance spans an AWS AZ and your vSphere AZ? Or can you run Multi-AZ across multiple vSphere AZs without a copy in an AWS AZ?

One thing I’m very much looking forward to is any connectivity between VMConAWS and Lambda.

This was a chalk talk, which is more interactive than a normal session, with plenty of questions. That meant we didn’t cover as many native AWS service use cases as I had hoped, but it seemed a real help for many people to get their questions answered, particularly about data transfer charges.
