Virtualisation Field Day 4 Preview: Scale Computing

Virtualisation Field Day 4 is happening in Austin, Texas from 14th-16th January and I’m very lucky to be invited as a delegate.

I’ve been previewing the companies attending; have a look at my introductory post: Virtualisation Field Day 4 Preview.

Scale Computing has presented previously at Storage Field Day 5. This is their first Virtualisation Field Day, but they must be keen to get their message out as they’ve already signed up for Virtualisation Field Day 5.

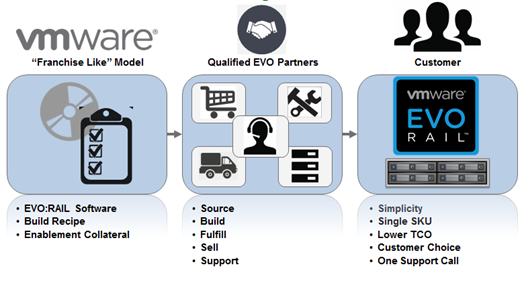

Scale Computing is another player in the hyper-converged space along with Nutanix, SimpliVity and VMware’s EVO:RAIL. They have been shipping hyper-converged as long as SimpliVity but are less well known. Their management team comes from Avamar (now EMC), Double-Take, Seagate, Veritas and Corvigo.

The writing is on the wall that converged and hyper-converged will be the only way you purchase infrastructure in the future. Why waste time rolling your own? That leaves plenty of opportunity for a massive market. Scale Computing started life as a scale-out storage platform and then added compute.

Scale Computing has a hyper-converged appliance called HC3 running on KVM, offering an alternative to the behemoths that are VMware and Microsoft. The HC3 name comes from Hyper-Converged 3 (being 1: servers, 2: storage and 3: virtualisation). Their marketing is all about reducing cost and simplifying virtualisation, and they pitch HC3 as ideal for those who haven’t adopted virtualisation due to cost and complexity, or who are looking for a cheaper alternative. They generally target SMB-sized workloads, but these can still grow fairly large.
