AWS re:Invent 2018: A Serverless Journey: AWS Lambda Under the Hood – SRV409

Marc Brooker & Holly Mesrobian from AWS


This looked like a super interesting session, as AWS doesn’t often let you peek under the hood of how it runs its infrastructure. Of all the AWS services, Lambda is arguably the most interesting under the hood: the whole point of Lambda is that you don’t have to worry about what’s underneath, and there’s a big engine down there!

Running highly available, large-scale systems is a lot of work. It involves:

- load balancing

- scaling up and down

- handling failures

When you use Lambda as part of a serverless platform, you don’t need to provision, manage, or scale any servers, although the servers are obviously still there. As a developer you don’t need to concern yourself with any of the undifferentiated heavy lifting, but there’s a very sophisticated architecture underneath to make that abstraction work.

Holly and Marc went through how AWS designed one of its fastest-growing services. Lambda processes trillions of requests for customers across the world.

Historically, Lambda has run on containers, and it now also uses Firecracker, a micro-VM. AWS handles the deployment, availability and scaling of all these containers. Lambda functions run when an event triggers them.

Once an event trigger fires, Lambda injects your custom code into one of these containers and runs it. Depending on what happens, the container may be shut down or reused for another invocation of your function. Because your code and any libraries it depends on may need to be fetched across the network, it can take a little while for the container to actually get your code and run it (a cold start). Java functions also need the JVM to load into memory, which can take a few seconds depending on your dependencies.
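You can’t avoid cold starts entirely, but you can make warm invocations cheaper. Here is a minimal Python sketch, assuming the standard `handler(event, context)` entry point: expensive setup lives at module level, so it runs once per container during the cold start and is reused by every warm invoke. `_expensive_init` and `INIT_COUNT` are hypothetical stand-ins for importing libraries or creating SDK clients.

```python
import time

# Module-level code runs once per container (execution environment), during
# the cold start. Warm invocations of the same container reuse these objects.
INIT_COUNT = 0


def _expensive_init():
    """Hypothetical stand-in for loading libraries, config or SDK clients."""
    global INIT_COUNT
    INIT_COUNT += 1
    return {"created_at": time.time()}


CLIENT = _expensive_init()


def handler(event, context):
    # Only per-request work happens here; CLIENT is reused on warm invokes.
    return {"init_count": INIT_COUNT, "echo": event.get("msg")}
```

Calling `handler` twice in the same process reports `init_count` of 1 both times, which mirrors what a warm container sees.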

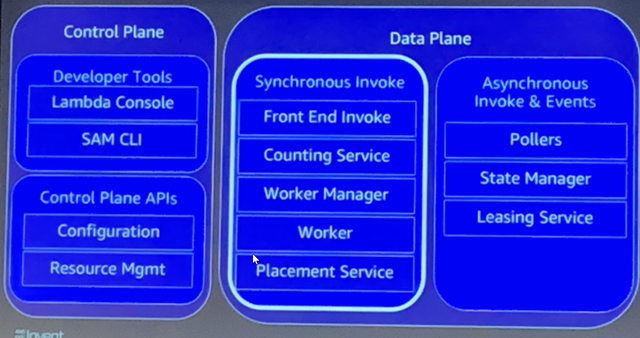

Lambda architecture

Counting Service: provides a region-wide view of customer concurrency to help enforce configured limits (soft limits). Designed for high throughput and low latency.
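To make the Counting Service’s role concrete, here is a hypothetical, single-process Python sketch of per-account concurrency enforcement. The real service is distributed and region-wide; `ConcurrencyCounter`, its methods and the default limit of 1000 are illustrative assumptions. An invoke reserves one unit of concurrency before it runs, is throttled once the limit is reached, and releases the unit when it finishes.

```python
import threading


class ConcurrencyCounter:
    """Hypothetical stand-in for a concurrency-counting service.

    Tracks in-flight invocations per account and rejects (throttles) new
    ones once the account's concurrency limit is reached.
    """

    def __init__(self, default_limit: int = 1000):
        self.default_limit = default_limit  # assumed default, not from the talk
        self.limits = {}       # account -> configured limit
        self.in_flight = {}    # account -> current concurrent invocations
        self._lock = threading.Lock()

    def set_limit(self, account: str, limit: int) -> None:
        with self._lock:
            self.limits[account] = limit

    def try_acquire(self, account: str) -> bool:
        """Reserve one unit of concurrency; False means the invoke is throttled."""
        with self._lock:
            limit = self.limits.get(account, self.default_limit)
            current = self.in_flight.get(account, 0)
            if current >= limit:
                return False
            self.in_flight[account] = current + 1
            return True

    def release(self, account: str) -> None:
        """Return the unit when the invocation finishes."""
        with self._lock:
            current = self.in_flight.get(account, 0)
            if current > 0:
                self.in_flight[account] = current - 1
```

With a limit of 2, the third concurrent `try_acquire` returns `False` until one of the first two invocations calls `release`.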

Worker Manager: tracks the idle and busy state of containers and schedules incoming invoke requests to available containers. Shuts down idle containers and optimises for running code on a warm sandbox.
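The Worker Manager’s job can be pictured as a warm pool keyed by function. This is a hypothetical, single-process Python sketch, not AWS’s implementation: `WorkerManager`, `acquire`, `release` and `reap_idle` are made-up names, and the 300-second idle timeout is an arbitrary assumption (the session notes don’t give a number). It reuses an idle sandbox only for the same function, falls back to a cold start otherwise, and shuts down sandboxes that have sat idle too long.

```python
import time


class WorkerManager:
    """Hypothetical sketch: track idle/busy sandboxes and prefer warm ones."""

    def __init__(self, idle_timeout_s: float = 300.0, clock=time.monotonic):
        self.idle_timeout_s = idle_timeout_s  # assumed value
        self.clock = clock
        self.idle = {}     # function name -> list of (sandbox_id, idle_since)
        self.busy = set()  # sandbox ids currently running an invoke
        self._next_id = 0

    def acquire(self, function: str):
        """Return (sandbox_id, was_warm), reusing an idle sandbox of the same function."""
        pool = self.idle.get(function, [])
        if pool:
            sandbox_id, _ = pool.pop()
            self.busy.add(sandbox_id)
            return sandbox_id, True
        # Cold start. In the real system this would go through the Placement
        # Service and a Worker; here we just mint a new id.
        self._next_id += 1
        sandbox_id = f"{function}-sbx-{self._next_id}"
        self.busy.add(sandbox_id)
        return sandbox_id, False

    def release(self, function: str, sandbox_id: str) -> None:
        """Mark the sandbox idle so the next invoke of the same function can reuse it."""
        self.busy.discard(sandbox_id)
        self.idle.setdefault(function, []).append((sandbox_id, self.clock()))

    def reap_idle(self) -> list:
        """Shut down sandboxes idle longer than idle_timeout_s and return their ids."""
        now = self.clock()
        reaped = []
        for function, pool in self.idle.items():
            keep = []
            for sandbox_id, since in pool:
                if now - since > self.idle_timeout_s:
                    reaped.append(sandbox_id)
                else:
                    keep.append((sandbox_id, since))
            self.idle[function] = keep
        return reaped
```

Injecting `clock` keeps the sketch testable: a fake clock lets you move time forward and watch idle sandboxes get reaped.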

Worker: provisions a secure environment for customer code execution. Creates and manages a collection of sandboxes and manages multiple language runtimes. Notifies the Worker Manager when a sandbox has been provisioned.

Placement Service: places sandboxes on workers to maximise packing density without impacting customer experience or cold-path latency.
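Packing sandboxes densely onto workers is a bin-packing problem. The notes don’t say which algorithm the Placement Service uses, so this Python sketch swaps in first-fit decreasing, a textbook heuristic, and packs by memory alone. `place_sandboxes` and the capacity figures are hypothetical; real placement also has to weigh CPU, latency and customer experience.

```python
def place_sandboxes(sandbox_mem_mb, worker_capacity_mb):
    """First-fit decreasing bin packing by memory.

    Returns a list of workers, each a list of the sandbox sizes (MB) placed on it.
    """
    workers = []  # each entry: [free_mb, [sizes placed on this worker]]
    for size in sorted(sandbox_mem_mb, reverse=True):
        if size > worker_capacity_mb:
            raise ValueError(f"sandbox of {size} MB does not fit on any worker")
        # Put the sandbox on the first existing worker with enough free memory.
        for worker in workers:
            if worker[0] >= size:
                worker[0] -= size
                worker[1].append(size)
                break
        else:
            # No existing worker has room: start a new one.
            workers.append([worker_capacity_mb - size, [size]])
    return [sizes for _, sizes in workers]
```

For example, `place_sandboxes([512, 1024, 256, 128, 128, 1024], 2048)` returns `[[1024, 1024], [512, 256, 128, 128]]`: six sandboxes on two workers.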

Load Balancing

Traffic is routed across hosts distributed across Availability Zones (AZs). An invoke goes through the Front End, which sends it to a reserved sandbox if one exists; otherwise a new sandbox is requested through the Worker Manager, initialised on a Worker, and then invoked with your code.

Auto Scaling

Provisioning function capacity when it’s needed and releasing it when it isn’t.

Workers are recycled when their lease expires, normally after 6 hours.
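Lease-based recycling can be sketched as splitting the worker fleet into active and expired workers. This is a hypothetical Python sketch: `Worker` and `split_by_lease` are illustrative names, and the only number taken from the session is the roughly 6-hour lease. Draining in-flight work before a worker is actually recycled isn’t shown.

```python
from dataclasses import dataclass

LEASE_SECONDS = 6 * 60 * 60  # "normally 6 hours" per the session notes


@dataclass
class Worker:
    worker_id: str
    leased_at: float  # seconds, on the same clock as `now`


def split_by_lease(workers, now, lease_seconds=LEASE_SECONDS):
    """Return (active, expired); expired workers should be drained and replaced."""
    active, expired = [], []
    for w in workers:
        if now - w.leased_at >= lease_seconds:
            expired.append(w)
        else:
            active.append(w)
    return active, expired
```

Recycling on a fixed lease means every worker is regularly replaced with a fresh one, independently of how busy it is.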

Handling failures

Handling host and AZ failures

Lambda is designed so that there is always a healthy host available to run your code.
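One way to picture this is a router that filters out unhealthy hosts, and any host in a failed AZ, before choosing one. This is a hypothetical Python sketch with made-up host records; random choice among healthy candidates is a simple stand-in, since the notes don’t describe the real routing policy. Because hosts are spread across AZs, losing one AZ still leaves candidates in the others.

```python
import random


def pick_host(hosts, unhealthy_azs, rng=random):
    """Pick a healthy host, skipping unhealthy hosts and any host in a failed AZ.

    `hosts` is a list of dicts like {"id": "h1", "az": "az-a", "healthy": True}.
    """
    candidates = [
        h for h in hosts if h["healthy"] and h["az"] not in unhealthy_azs
    ]
    if not candidates:
        raise RuntimeError("no healthy host available")
    return rng.choice(candidates)
```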

Isolation

Keeping Workloads Safe and Separate

Isolation serves both security and operational concerns, such as consistent performance.

Multiple guest operating systems run on each box using a hypervisor. A sandbox is never shared across multiple functions, and the isolation boundary between accounts is the VM.
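The two isolation rules above (a sandbox is never shared across functions; the VM is the boundary between accounts) can be expressed as invariants over a list of placements. This hypothetical Python checker uses made-up tuple fields and only illustrates the rules; it isn’t how Lambda enforces them.

```python
from collections import defaultdict


def isolation_violations(placements):
    """Check the isolation rules against a placement list.

    `placements` is a list of (vm_id, sandbox_id, account, function) tuples.
    Rule 1: a sandbox serves exactly one function.
    Rule 2: a VM hosts sandboxes from one account only.
    """
    funcs_per_sandbox = defaultdict(set)
    accounts_per_vm = defaultdict(set)
    for vm_id, sandbox_id, account, function in placements:
        funcs_per_sandbox[sandbox_id].add((account, function))
        accounts_per_vm[vm_id].add(account)

    problems = []
    for sandbox_id, funcs in funcs_per_sandbox.items():
        if len(funcs) > 1:
            problems.append(f"sandbox {sandbox_id} shared by {len(funcs)} functions")
    for vm_id, accounts in accounts_per_vm.items():
        if len(accounts) > 1:
            problems.append(f"VM {vm_id} hosts {len(accounts)} accounts")
    return problems
```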

Firecracker

Firecracker delivers much better utilisation while keeping strong isolation. A single function executes in a single Firecracker micro-VM, so functions no longer share a container.

The session also covered hardware device emulation in Firecracker, which makes adding virtual hardware very quick.

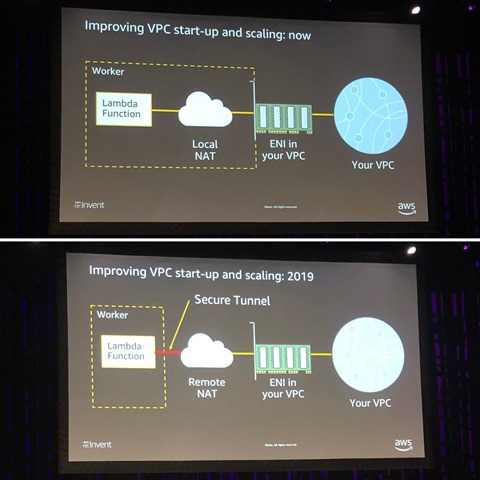

Coming to Lambda in 2019: improved VPC start-up scaling, moving the ENI (elastic network interface) off the Worker to a remote NAT, and sharing ENIs between workers.

Utilisation

Utilisation means keeping servers busy: the percentage of resources doing useful work. The goal is to have as much of those resources as possible running your code. With Lambda you only pay for useful work, so you don’t need to worry about utilisation; that’s AWS’s problem, and they do the optimisation to keep servers busy. They use statistical multiplexing to put different (uncorrelated) workloads on the same server. Packing identical workloads together would give them the same resource profile, so they would all peak at once and saturate some resource.
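A small worked example shows why mixing workloads helps. The demand numbers below are made up: `daytime` and `nighttime` peak at different times, while `daytime_clone` has exactly the same shape as `daytime`. The peak of the combined load can be much lower than the sum of the individual peaks, but only when the peaks don’t line up.

```python
def combined_peak(workloads):
    """Peak demand when all the given workloads share one server.

    Each workload is a list of demand values, one per time slot.
    """
    return max(sum(slot) for slot in zip(*workloads))


def sum_of_peaks(workloads):
    """Capacity needed if each workload were sized for its own peak."""
    return sum(max(w) for w in workloads)


# Hypothetical demand (arbitrary units) across six time slots.
daytime = [8, 6, 2, 1, 1, 2]
nighttime = [1, 1, 2, 6, 8, 6]  # peaks when `daytime` is quiet
daytime_clone = list(daytime)   # identical profile, peaks at the same time

print(combined_peak([daytime, nighttime]), sum_of_peaks([daytime, nighttime]))  # 9 16
print(combined_peak([daytime, daytime_clone]))  # 16
```

Mixing the two differently shaped workloads needs 9 units of capacity rather than 16, whereas stacking two copies of the same workload needs the full 16 because their peaks coincide.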

There was so much more technical detail in this session that it’s worth watching the recording; I couldn’t get everything down in time!
