I was recently invited to give an internal presentation on serverless computing at an enterprise financial company, as part of a general “what’s happening in IT” series. A wider range of people attended than I expected: some business people and some IT people.

The business lens

In the questions and feedback afterwards, interestingly, some of the business people could see the value more easily than the IT people. They liked the coming together of business logic and IT, and could see the benefit of simply encoding what they need done in a serverless function without having to worry as much about all the IT infrastructure behind the scenes. Although the business people weren’t coders, someone likened the approach to using Excel macros. Some fairly sophisticated Excel functions have graced the trading desks of many an organisation. With Excel macros they didn’t need to think about infrastructure; Excel was just a platform you could code mathematical functions in. Sure, Excel macros had many issues (security, performance, availability, etc.), but they served the business need easily without having to get IT involved.

The IT lens

Afterwards I spoke to a development team leader. She’s very well versed in coding, a super smart algorithmic trading developer. She voiced a valid concern, though: with serverless functions you can’t control the latency of the function, so she couldn’t see any use for them in her team’s work. Part of the workflow they develop is low-latency trading, pricing and analytics, which of course is very latency and performance sensitive. Some of the workflows also include many steps necessary for compliance and auditing. A traded price range may need to be stored in a database for later reference. A priced trade needs to be logged somewhere, and a completed trade needs to go into another database, which kicks off a whole other bunch of workflows to be reconciled in the back office. She mentioned the low-latency algo code was working well, but they sometimes struggled with performance and speed on very busy trading days. Some of the compliance and auditing code sits very close, compute-wise, to the low-latency code. This makes it simpler to code the end-to-end transactions, but it means the most expensive low-latency compute cycles on the physical servers are also being “wasted” on compliance and auditing code, which may struggle to keep up on an extra busy trading day. Improving this would generally mean scaling up the existing compute resources. The compliance and auditing data was also used by many other integrated systems, so care was needed to make sure the secondary databases could keep up with low-latency demand.

This made me think of two things. First, this application would of course benefit from some splitting up. The low-latency code could be changed to push out the minimal amount of compliance and auditing information to another database, queue or even stream. A separate set of serverless functions could then very efficiently respond to an event, or pick up these trades or prices, and do whatever needs to be done (BTW, it’s not just functions that can be serverless; databases, queues and storage can be too!). This could also be massively scalable in parallel: one trade at a time or a million, and none of it was latency-sensitive once the initial small record was created.
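To make the split a little more concrete, here is a minimal Python sketch. It is not tied to any real serverless platform: a standard-library `queue.Queue` stands in for the database, queue or stream, and the hypothetical `handle_audit_event` function stands in for a serverless function handler. The names `TradeRecord`, `execute_trade` and `drain_audit_queue` are illustrative assumptions, not anyone’s actual code.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from queue import Empty, Queue


@dataclass(frozen=True)
class TradeRecord:
    """The minimal record the low-latency path emits (hypothetical fields)."""
    trade_id: str
    price: float
    quantity: int


def execute_trade(trade_id: str, price: float, quantity: int, audit_queue: Queue) -> str:
    # The latency-sensitive trading work would happen here; it is omitted.
    # The hot path only enqueues a small record and returns straight away,
    # leaving compliance and auditing work to run elsewhere.
    audit_queue.put(TradeRecord(trade_id, price, quantity))
    return trade_id


def handle_audit_event(record: TradeRecord) -> dict:
    # Stand-in for a serverless function handler: enrich and "log" one record.
    return {
        "trade_id": record.trade_id,
        "notional": record.price * record.quantity,
        "status": "logged",
    }


def drain_audit_queue(audit_queue: Queue, max_workers: int = 4) -> list[dict]:
    # Pull everything currently queued without blocking.
    records = []
    while True:
        try:
            records.append(audit_queue.get_nowait())
        except Empty:
            break
    # Process records in parallel, much as independent function invocations
    # might; pool.map returns results in the same order as the input.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(handle_audit_event, records))


if __name__ == "__main__":
    q: Queue = Queue()
    execute_trade("T1", 100.5, 10, q)
    execute_trade("T2", 99.0, 5, q)
    for result in drain_audit_queue(q):
        print(result)
```

The design choice is that the hot path does the least work possible. Handling a busier trading day then means adding audit workers (or, on a serverless platform, letting the platform add instances) rather than scaling up the expensive low-latency hardware.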

CAN use or CAN’T use

Second, the development team leader was seeing how serverless functions COULD NOT be used for latency-sensitive workloads, but not how useful they COULD be for all the rest of the compliance and auditing code. The low-latency code was the most important, so naturally her focus was on that.

Splitting up the app is an architectural discussion and may not in fact be suitable in the end, but the more important point is that sometimes we are a little myopic and only see what a technology CAN’T do, rather than looking at the bigger picture and seeing what it CAN do. This can distance you from the business. Oh, and of course, Excel can do a LOT!

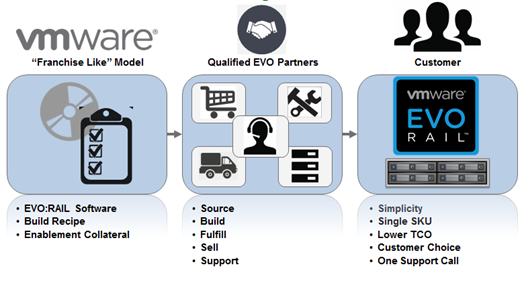

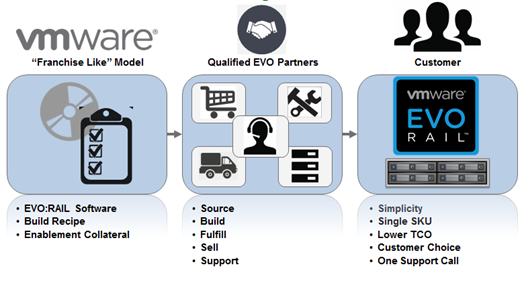

VMware has announced it is entering the hyper-converged appliance market together with hardware partners, who will ship pre-built hardware appliances running VMware software. See my introduction, VMware Marvin comes alive as EVO:Rail, a Hyper-Converged Infrastructure Appliance.

Each of the four compute nodes within the 2U appliance has a very specific minimum set of specifications. Some hardware vendors may go above and beyond this by adding, say, GPU cards for VDI or more RAM per host, but VMware wants a standard approach. These kinds of servers don’t currently exist on the market, other than what other hyper-converged companies whitebox from, say, SuperMicro, so we’re talking about new hardware from partners.


Each of the four EVO:RAIL nodes within a single appliance will have, at a minimum, the following:

- Two Intel Xeon E5-2620 v2 six-core CPUs

- 192GB of memory

- One SLC SATADOM or SAS HDD for the ESXi boot device

- Three SAS 10K RPM 1.2TB HDD for the VSAN datastore

- One 400GB MLC enterprise-grade SSD for read/write cache

- One VSAN certified pass-through disk controller

- Two 10GbE NIC ports (either 10GBase-T or SFP+ connections)

- One 1GbE IPMI port for out-of-band management

Each appliance is fully redundant with dual power supplies. As there are four ESXi hosts per appliance, you are covered for hardware failures or maintenance. The ESXi boot device and all HDDs and SSDs are enterprise-grade, and VSAN itself is resilient. EVO:RAIL version 1.0 can scale out to four appliances, giving you a total of 16 ESXi hosts backed by a single vCenter and a single VSAN datastore. There is also some new intelligence which automatically scans the local network for new EVO:RAIL appliances when they have been connected and easily adds them to the EVO:RAIL cluster.
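As a quick sanity check on what a maxed-out EVO:RAIL 1.0 cluster adds up to, the Python below simply multiplies the per-node minimum specification listed above by the node count. It is a sketch under stated assumptions: the figures are the minimums (vendors may ship more), the HDD total is raw capacity in decimal units before VSAN keeps extra copies of data for resilience (which lowers usable space), and the names `NodeSpec` and `cluster_totals` are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node minimum specification for an EVO:RAIL node, as listed above."""
    cpu_sockets: int = 2
    cores_per_cpu: int = 6      # Intel E5-2620 v2 is a six-core part
    memory_gb: int = 192
    hdd_count: int = 3
    hdd_size_gb: int = 1200     # 1.2TB SAS 10K HDD, decimal GB
    ssd_cache_gb: int = 400     # MLC SSD used as read/write cache


NODES_PER_APPLIANCE = 4
MAX_APPLIANCES = 4  # EVO:RAIL 1.0 scale-out limit


def cluster_totals(appliances: int, node: NodeSpec = NodeSpec()) -> dict:
    # NodeSpec is frozen (immutable), so a shared default instance is safe.
    if not 1 <= appliances <= MAX_APPLIANCES:
        raise ValueError(f"EVO:RAIL 1.0 supports 1 to {MAX_APPLIANCES} appliances")
    hosts = appliances * NODES_PER_APPLIANCE
    return {
        "esxi_hosts": hosts,
        "cpu_cores": hosts * node.cpu_sockets * node.cores_per_cpu,
        "memory_gb": hosts * node.memory_gb,
        # Raw capacity only: VSAN's resilience copies reduce what is usable.
        "raw_vsan_hdd_gb": hosts * node.hdd_count * node.hdd_size_gb,
        "ssd_cache_gb": hosts * node.ssd_cache_gb,
    }


print(cluster_totals(4))
# {'esxi_hosts': 16, 'cpu_cores': 192, 'memory_gb': 3072, 'raw_vsan_hdd_gb': 57600, 'ssd_cache_gb': 6400}
```

So a full four-appliance cluster works out at 16 hosts, 192 cores, 3,072GB of RAM and 57.6TB of raw HDD capacity feeding the single VSAN datastore, before any resilience overhead.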


Categories: ESX, Scale, Storage, vCenter, VDI, VMware, VMworld Tags: EVO, networking, storage, vcenter, vmware, vmworld, VSAN


The EVO:RAIL management software has been built to dramatically simplify deploying the appliances as well as provisioning VMs. The user guide is only 29 pages, which gives you an idea of how hard VMware is driving simplicity. Marvin actually exists as a character icon within the management interface, with an embedded “V” and “M”.


VMware recognises that vCenter has had a rather large feature bloat problem over the years. It has introduced new components such as SSO, which do provide needed functionality but add to the complexity of deploying vSphere. VMware has also tried to bring all these components together in the vCenter Server Appliance (VCSA).

This is great, but the VCSA lacks some functionality of the Windows version, such as Linked Mode, and some customers worry about managing the embedded database for large deployments. As EVO:RAIL is aimed at smaller deployments and isn’t concerned with linking vCenters together, the VCSA is a good option, and the EVO:RAIL software is in fact a package which runs as part of the VCSA. No additional database is required; it is all built into the appliance. It uses the same public APIs to communicate with vCenter but acts as a layer that provides a simpler user experience, hiding some of the complexity of vCenter. vCenter is still there, so you can always connect directly with the Web Client and manage VMs as you normally do, and changes made in either interface are shared, so there are no conflicts.

EVO:RAIL is also written purely in HTML5, even the VM console, so there is no yucky Flash as in the vSphere Web Client, and it works in any browser, even on an iPad. Interestingly, it has a look a little similar to Microsoft Azure Pack. Who would ever have thought VMware would write a VM management interface built for simplicity that resembles an existing Microsoft one!



VMware will announce later today at VMworld US that it is entering the hyper-converged appliance market with a solution called EVO:Rail. This has been rumoured for a while, since an eagle-eyed visitor to the VMware campus spotted a sign for Marvin in the briefing center. Marvin was the engineering name and has stuck around in parts of the product, but its grown-up name is EVO:Rail.


EVO(lution) is eventually going to be a suite of products and solutions. Rail is the first announcement, named after the smallest part of a data center rack, the rail, so you can infer that VMware intends to build this portfolio out to an EVO:RACK and beyond.

EVO:Rail combines compute, storage and networking resources into a hyper-converged infrastructure appliance, with the intention of dramatically simplifying infrastructure deployment. Hardware-wise, this is pretty much what Nutanix and SimpliVity, to name two examples, do today. Look out for the acronym HCIA when hunting for newly added VMworld sessions.

VMware is not, however, entering the hardware business itself; that would undermine the billions of marketing budget spent on the Software-Defined Data Center message of software ruling the world. Partner hardware vendors will build the appliance to strict specifications, with VMware’s EVO:RAIL software bundle pre-installed, and the appliance will be delivered as a single SKU. Some may see this as a technicality: VMware has always said that if you need specific hardware, you are not software-defined. Does EVO:RAIL count as specific hardware?

Support for both hardware and software will come from the hardware vendor, with VMware providing software support to the hardware vendor at the back end.



This post is the last in the series: Designing a Virtual Infrastructure that Scales.

I hope I’ve managed to give you some information on what you need to consider when scaling your virtual environment. This series isn’t actually about giving you all the answers, but rather about helping you think about the questions you need to ask to make sure you get answers specific to your environment.


So, in summary:

- Keep it simple!

- Engage everyone

- Do your research

- Do thorough planning

- Think big

- Think ahead

- Start small

- Create modular building blocks

- Make it the same everywhere

This all started as a presentation at the London VMware User Group meeting, #LonVMUG, where I talked about some of the things you should be thinking about when scaling your virtual infrastructure.

Here are the links to all the posts:

Part 1, Taking Stock

Part 2, Speak to the People

Part 3, Scaling for VDI

Part 4, Designing Thinking

Part 5, So, after all that…

This post is the fourth in the series: Designing a Virtual Infrastructure that Scales.

In Part 3, Scaling for VDI, I went through some of the considerations specific to VDI and talked about how big your environment can get if you decide to go VDI.

In this post it’s time to talk about actual infrastructure design and start thinking and planning for how to handle scale.


In Part 1, Taking Stock, I talked about how the virtual hosting environment you built a few years ago may be starting to get a little unwieldy to manage. I suggested: “Now is the time to pause if you can and take stock. Have a good look at your current environment and then zoom out and look at the big picture to plan the next stage of your virtual infrastructure because if you don’t you may find it running away from you.”

Hopefully by now you have an idea of what you actually need to virtualise. You’ve identified the number of servers and workstations, what resources they will require, and who is going to be accessing them, from where and at what times. You should know which VMs require business recovery and to where. You have done some calculations on how many hosts you will need to run your VMs and planned failover capacity for HA and DRS. You have an idea of VM network requirements, storage space and IOPS required.
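As a reminder of the kind of host-count calculation meant here, the Python sketch below sizes a cluster on both CPU and memory, takes whichever needs more hosts, and then adds spare hosts for HA failover (N+1 by default). The vCPU-to-core ratio, the memory headroom, the function name `hosts_required` and the example workload are all assumptions for illustration, not recommendations; your own consolidation ratios should come from the information you have gathered.

```python
import math


def hosts_required(
    vm_count: int,
    vcpu_per_vm: int,
    ram_gb_per_vm: int,
    host_cores: int,
    host_ram_gb: int,
    vcpu_per_core: float = 4.0,   # assumed vCPU:physical core consolidation ratio
    ram_headroom: float = 0.8,    # assumed: plan to use only 80% of host RAM
    failover_hosts: int = 1,      # N+1 spare capacity for HA
) -> int:
    # Hosts needed to satisfy CPU demand at the chosen vCPU:core ratio.
    cpu_hosts = math.ceil(vm_count * vcpu_per_vm / (host_cores * vcpu_per_core))
    # Hosts needed to satisfy memory demand while leaving headroom.
    ram_hosts = math.ceil(vm_count * ram_gb_per_vm / (host_ram_gb * ram_headroom))
    # The tighter resource sets the cluster size; then add failover capacity.
    return max(cpu_hosts, ram_hosts) + failover_hosts


# Hypothetical workload: 200 VMs of 2 vCPU / 8GB on 24-core, 384GB hosts.
print(hosts_required(200, 2, 8, host_cores=24, host_ram_gb=384))  # 7
```

In this made-up example memory, not CPU, is the constraint (six hosts versus five), so the cluster needs seven hosts once one spare is added for HA. Any business recovery capacity at another site would be sized separately.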

Now is the time to use all that information gathering and see how you can build an infrastructure to run it all.


This post is the third in the series: Designing a Virtual Infrastructure that Scales.

In Part 2, Speak to the People, I went through some communication ideas for involving more people in the information-gathering stage, so that you can ultimately put together a better infrastructure.

In this post I’m tackling the things you need to be thinking about when you consider VDI.


So…VDI, the promised solution to all your IT needs. No PCs on desks, thin clients, zero clients, happy clients, automated provisioning, stateless VMs, thin apps, streaming.

VDI is certainly a very different way of delivering IT to clients. It can be seen as terminal services on steroids, using some of the benefits of shared resources but allowing separation when you need it.

Why is VDI any different from just having VM workstations which people connect to?


This post is the second in the series: Designing a Virtual Infrastructure that Scales.

In Part 1, Taking Stock, I talked about looking at your current environment and seeing where you need to get to by doing a bit of crystal ball future capacity planning combined with understanding your current infrastructure limitations.

In this post I’m going to talk about something that isn’t done enough in infrastructure projects: actually talking to real-life people.

Many enterprise IT departments are pretty big places, often spread across the globe, working in different time zones in multiple separate vertical silos. You may well not have met everyone in your own team, let alone know what everybody else in your office does.

If you’re going to think about an infrastructure change to support a much bigger virtual environment, isn’t it worth looking at the really super-duper-bigger picture? Unless you know everything (and some of you may!), you really should be getting other people involved from the very beginning to see if they would like to jump on the bandwagon and make changes to their environment for the greater good.


I recently had the privilege of presenting at the London VMware User Group meeting, #LonVMUG, where I talked about some of the things you should be thinking about when scaling your virtual infrastructure.

I’ve turned part of the presentation into a series of posts, going through some of the aspects you should be considering when your virtual environment demands bigger, better, faster, more!

Part 1, Taking Stock

Part 2, Speak to the People

Part 3, Scaling for VDI

Part 4, Designing Thinking

Part 5, So, after all that…

Designing a Virtual Infrastructure that Scales: Part 1, Taking Stock

