Anyone who manages or architects a virtualisation environment battles against storage performance at some stage or another. If you run into compute resource constraints, it is very easy and fairly cheap to add more memory or perhaps another host to your cluster.

Being able to add compute incrementally makes it very simple and cost effective to scale. Networking is similar: it is very easy to patch in another 1Gb port, and with 10Gb becoming far more common, network bandwidth constraints seem to be the least of your worries. It’s not the same with storage. This is mainly down to cost and the fact that spinning hard drives haven’t got any faster. You can’t just swap out a slow drive for a faster one in a drive array, and a new array shelf is a large incremental cost.

Sure, flash is revolutionising array storage, but it’s going to take time to replace spinning rust with flash, and again it often comes down to cost. Purchasing an all-flash array, or even just a shelf of flash for your existing array, is expensive and a large incremental jump when perhaps you just need some more oomph during your month-end job runs.

VDI environments have often borne the brunt of storage performance issues simply due to the number of VMs involved, poor client software that was never written to be careful with storage IO and latency, and operational procedures for mass AV updates, patching etc. that simply kill any storage. VDI was often incorrectly justified with cost reduction as part of the benefit, which meant you never had any money to spend on storage for what ultimately grew into a massive environment with annoyed users battling poor performance.

Large performance critical VMs are also affected by storage. Any IO that has to travel along a remote path to a storage array is going to be that little bit slower. Your big databases would benefit enormously from reducing this round trip time.

FVP

Along came PernixData at just the right time with a beautifully simple solution called FVP. Install some flash, SSD or PCIe, into your ESXi hosts, cluster it as a pooled resource and then use software to offload IO from the storage array to the ESXi host. Even better, it can cache writes as well and protect them in the flash cluster. The best IO in the world is the IO you don’t have to do, and this gives your storage array a little more breathing room. The benefit is that you can keep your existing array with its long update cycles and squeeze a little more life out of it without an expensive upgrade or even moving VM storage. By the way, the name FVP doesn’t stand for anything. It doesn’t stand for Flash Virtualisation Platform if you were wondering, which would be incorrect anyway as FVP accelerates more than flash.
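To make the idea of caching writes on the host and protecting them elsewhere in the flash cluster a little more concrete, here is a minimal Python sketch. It is only an illustration and not how FVP is actually implemented: the class name `WriteBackCache`, the use of plain dictionaries to stand in for the array, the local flash and a peer host’s flash, and the `flush` step are all assumptions of mine.

```python
class WriteBackCache:
    """Toy host-side cache (hypothetical): writes land on flash first."""

    def __init__(self, array, peer_flash):
        self.array = array            # dict standing in for the storage array
        self.local_flash = {}         # dict standing in for this host's flash
        self.peer_flash = peer_flash  # dict standing in for a peer host's flash
        self.dirty = set()            # blocks not yet written to the array

    def write(self, block, data):
        # Store the write locally and on a peer, so losing one host
        # does not lose data the array has not seen yet.
        self.local_flash[block] = data
        self.peer_flash[block] = data
        self.dirty.add(block)

    def read(self, block):
        # Serve from local flash when possible, otherwise fetch from
        # the array and keep a copy for next time.
        if block in self.local_flash:
            return self.local_flash[block]
        data = self.array[block]
        self.local_flash[block] = data
        return data

    def flush(self):
        # Destage dirty blocks to the array, then drop the peer copies.
        for block in sorted(self.dirty):
            self.array[block] = self.local_flash[block]
            self.peer_flash.pop(block, None)
        self.dirty.clear()
```

The point of the sketch is simply that the array only sees the write when `flush` runs, which is where the breathing room comes from.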

Read more…

Many companies trying to take advantage of cloud computing are embracing the moniker of the “Software Defined Data Center” as one way to understand and communicate the benefits of moving towards an infrastructure resource utility model. VMware has taken on the term SDDC to mean doing everything in your data center with software and not requiring any custom hardware. Other companies sell “software-defined” products which do require particular hardware for various reasons, but the functionality can be programmatically controlled and requested all in software. Whether or not your definition of “software-defined” mandates hardware, the general premise (nothing to do with premises!) is being able to deliver and scale IT resources programmatically.

This is great but I think SDDC is just a stepping stone to what we are really trying to achieve which is the “Policy Defined Data Center”.

Once you can deliver IT resources in software, the next step is ensuring those IT resources follow your business rules and processes, what you would probably call business intelligence policy enforcement. These are the things that your business asks of IT, partly for regulatory reasons like data retention and storing credit cards securely, but they also encompass a huge amount of what you do in IT.

Here are a few examples of the kinds of policies you may have:

- Users need to change their passwords every 30 days.

- Local admin access to servers is strictly controlled by AD groups.

- Developers cannot have access to production systems.

- You can only RDP to servers over a management connection.

- Critical services need to be replicated to a DR site, some synchronously, others not.

- Production servers need to get priority over test and development servers.

- Web server connections need to be secured with SSL.

- SQL Server storage needs to have higher priority over say print servers.

- Oracle VMs need to run on particular hosts for licensing considerations.

- Load balanced web servers need to sit in different blade chassis in different racks.

- Your trading application needs to have maximum x latency and minimum y IOPS.

- Your widget application needs to be recoverable within an hour and be no more than 2 hours out of date.

- Your credit card database storage needs to be encrypted.

- All production servers need to be backed up, some need to be kept for 7 years.
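A few of the policies above (latency, IOPS, how out of date a copy may be, encryption) can be written down as data and checked automatically, which is really what “policy defined” is getting at. The Python below is a rough sketch under my own assumptions: the `ServicePolicy` and `ObservedState` classes, their field names and the example numbers are all hypothetical and not taken from any product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServicePolicy:
    """What the business asks for (hypothetical field names)."""
    name: str
    max_latency_ms: float
    min_iops: int
    max_data_age_minutes: int
    must_be_encrypted: bool


@dataclass(frozen=True)
class ObservedState:
    """What the infrastructure is actually delivering."""
    latency_ms: float
    iops: int
    replica_age_minutes: int
    encrypted: bool


def violations(policy, state):
    """Return a list of human-readable policy violations (empty if compliant)."""
    problems = []
    if state.latency_ms > policy.max_latency_ms:
        problems.append("latency above maximum")
    if state.iops < policy.min_iops:
        problems.append("IOPS below minimum")
    if state.replica_age_minutes > policy.max_data_age_minutes:
        problems.append("replica too far out of date")
    if policy.must_be_encrypted and not state.encrypted:
        problems.append("storage not encrypted")
    return problems


# Example: a widget application whose copy may be no more than 2 hours old.
widget = ServicePolicy("widget", max_latency_ms=20.0, min_iops=500,
                       max_data_age_minutes=120, must_be_encrypted=False)
today = ObservedState(latency_ms=8.0, iops=900,
                      replica_age_minutes=180, encrypted=False)
print(violations(widget, today))
```

Once policies look like this, enforcement becomes a loop over services rather than a document someone has to remember to read.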

Read more…

HP has updated its ESXi customised images to reflect the recent release of ESXi 5.5 as well as its September 2013 Service Pack for ProLiant.

HP’s customised images are fully integrated sets of specific drivers and software that are tested to work together. You can see the list of Driver Versions in HP supplied VMware ESX/ESXi images.

I have done an extensive update of my HP Virtual Connect Flex-10 & VMware ESX(i) pre-requisites post which includes these new customised images.

HP Custom Image for VMware ESXi 5.5.0 GA – September 2013:

HP Custom Image for VMware ESXi 5.1 Update 1 – September 2013:

The new and updated features for the HP vSphere 5.5/5.1 customised images for September 2013 include:

- Provider features

  - Report Smart Array driver name and version.

  - Report SAS driver name and version.

  - Report SCSI driver name and version.

  - Report Firmware version of ‘System Programmable Logic Device’.

  - Report SPS/ME firmware.

  - Added SCSI HBA Provider.

  - Report IdentityInfoType and IdentityInfoValue for PowerControllerFirmware class.

  - IPv6 support for OA and iLO.

  - Report Memory DIMM part number for HP Smart Memory.

  - Added new ‘Test SNMP Trap’.

  - Updated reporting of memory configuration to align with iLO and health Driver.

- AMS features

  - Report running SW processes to HP Insight Remote Support.

  - Report vSphere 5.5 SNMP agent management IP and enable VMware vSphere 5.5 SNMP agent to report iLO4 management IP.

  - IML logging for NIC and SAS traps.

  - Limit AMS log file size and support log redirection as defined by the ESXi host parameter ScratchConfig.ConfiguredScratchLocation.

- Utilities features

  - HPTESTEVENT – New utility to generate test WBEM indication and test SNMP trap.

  - HPSSACLI – New utility to replace hpacucli.

  - HPONCFG – Displays the Server Serial Number along with the Server Name when using the hponcfg -g switch to extract the Host System Information.

VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

To read the updates for all the suite components, see my post: What’s New in vCloud Suite 5.5: Introduction

vCloud Networking and Security has been updated with two networking enhancements, LACP and flow based marking & filtering.

Link Aggregation Control Protocol (LACP) is used to bond your physical network uplinks together to increase bandwidth, improve load balancing and improve link level redundancy. vSphere 5.1 supported a simplified version of LACP with support for only a single Link Aggregation Group (LAG) per host and not much choice of load balancing algorithms.

LACP in 5.5 gives you over 22 load balancing algorithms, and you are now able to create 32 LAGs per host so you can bond together all those physical NICs.
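If you haven’t looked at how LAG load balancing works, the algorithms generally boil down to hashing some fields of each flow and using the result to pick an uplink. The Python below is a generic sketch of that idea, not VMware’s implementation: the function name `pick_uplink`, the field names, the uplink names and the use of CRC32 as the hash are all my own assumptions.

```python
import zlib


def pick_uplink(flow, uplinks, fields=("src_ip", "dst_ip")):
    """Pick an uplink for a flow by hashing the chosen header fields.

    The same flow always lands on the same uplink, which keeps packets
    in order, while different flows spread across the LAG members.
    """
    if not uplinks:
        raise ValueError("LAG has no active uplinks")
    key = "|".join(str(flow[f]) for f in fields).encode()
    return uplinks[zlib.crc32(key) % len(uplinks)]


# Hypothetical LAG with four uplinks and one example flow.
lag = ["vmnic0", "vmnic1", "vmnic2", "vmnic3"]
flow = {"src_ip": "10.0.0.5", "dst_ip": "10.0.1.9",
        "src_port": 49152, "dst_port": 443}

by_ip = pick_uplink(flow, lag)
by_ip_and_port = pick_uplink(
    flow, lag, fields=("src_ip", "dst_ip", "src_port", "dst_port"))
print(by_ip, by_ip_and_port)
```

Choosing a different set of fields is essentially what choosing a different load balancing algorithm means, which is why having more of them to pick from matters when your traffic is dominated by a few source and destination pairs.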

Flow based marking and filtering provides granular traffic marking and filtering capabilities from a simple UI integrated with the VDS UI. You can provide stateless filtering to secure or control VM or hypervisor traffic. Any traffic that requires specific QoS treatment on physical networks can now be granularly marked with CoS and DSCP marking at the vNIC or port group level.

Manageability has been enhanced in the vSphere Web Client with an object-policy based model.

Firewall Rule management has been made easier. You can now reuse vCenter objects in firewall rule creation and there is an option to create VM vNIC level rules with full visibility into the virtual network traffic via Flow Monitoring.

Upgrades

To upgrade vShield, you must first upgrade vShield Manager and then upgrade the other components in this order:

- vShield Manager

- vCenter Server

- Other vShield components managed by vShield Manager

- ESXi hosts

You can upgrade just vShield to 5.5 if you want and still run vCenter Server 5.1 and ESXi 5.0/5.1 hosts.
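If you script your upgrade runbooks, the order above is easy to encode and check. This is a small Python sketch under my own assumptions: the names `UPGRADE_ORDER` and `is_valid_sequence` are hypothetical, and it treats skipping a component (for example leaving vCenter Server on 5.1) as allowed so long as nothing is done out of order.

```python
# Order taken from the list above; the identifiers are my own.
UPGRADE_ORDER = [
    "vShield Manager",
    "vCenter Server",
    "Other vShield components",
    "ESXi hosts",
]


def is_valid_sequence(planned):
    """True if the planned steps respect UPGRADE_ORDER.

    Steps may be skipped, but may not be repeated or done out of order.
    Raises ValueError for a component that is not in UPGRADE_ORDER.
    """
    positions = [UPGRADE_ORDER.index(step) for step in planned]
    return all(a < b for a, b in zip(positions, positions[1:]))


# Upgrading only the vShield pieces and leaving vCenter and ESXi alone.
print(is_valid_sequence(["vShield Manager", "Other vShield components"]))
# Upgrading vCenter Server before vShield Manager breaks the order.
print(is_valid_sequence(["vCenter Server", "vShield Manager"]))
```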

Categories: ESX, Update Manager, vCenter, vCloud Director, vCOPS, View, VMware, VMworld Tags: esxi, networking, vCD, vcenter, vcns, vmware, vmworld

VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

To read the updates for all the suite components, see my post: What’s New in vCloud Suite 5.5: Introduction

VMware Virtual Flash (vFlash) or, to use its official name, “vSphere Flash Read Cache” is one of the new standout features of vCloud Suite 5.5.

vFlash allows you to take multiple Flash devices in hosts in a cluster and virtualise them to be managed as a single pool. In the same way CPU and memory are seen as a single virtualised resource across a cluster, vFlash creates a cluster wide Flash resource.

VMs can be configured to use this vFlash resource to accelerate performance for reads. vFlash works in write-through cache mode, so in effect it doesn’t cache writes in this release; it just passes them to the back-end storage. You don’t need to use in-guest agents or change the guest OS or application to take advantage of vFlash. You can have up to 2TB of Flash per host and all kinds of storage are supported: NFS, VMFS and RDMs. Hosts can also use this resource for the Host Swap Cache, which is used when the host needs to page memory to disk.
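Write-through is easy to picture with a few lines of Python. This is a generic sketch of a write-through read cache with least-recently-used eviction, not VMware’s implementation: the class name `WriteThroughReadCache`, the block-count capacity and the LRU policy are all assumptions of mine.

```python
from collections import OrderedDict


class WriteThroughReadCache:
    """Toy read cache: reads may hit flash, writes always go to the back end."""

    def __init__(self, backend, capacity_blocks):
        self.backend = backend           # dict standing in for the datastore
        self.capacity = capacity_blocks  # how many blocks the cache may hold
        self.cache = OrderedDict()       # oldest entry first, newest last
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            self.cache.move_to_end(block)  # mark as most recently used
            self.hits += 1
            return self.cache[block]
        self.misses += 1
        data = self.backend[block]
        self._store(block, data)
        return data

    def write(self, block, data):
        # Write-through: the back end is always updated straight away.
        self.backend[block] = data
        if block in self.cache:
            # Only refresh an existing cached copy so reads stay consistent.
            self._store(block, data)

    def _store(self, block, data):
        self.cache[block] = data
        self.cache.move_to_end(block)
        while len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict the least recently used
```

Because every write reaches the back-end storage before it is acknowledged, losing the cache never loses data, which is also why this mode does nothing to speed writes up.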

A VMDK can be configured with a set amount of vFlash cache, giving you control over exactly which VM disks get the performance boost, so you can pick your app database drive without having to boost your VM OS disk as well. You can configure DRS-based vFlash reservations. There aren’t any shares settings, but this may be coming in a future release. vMotion is also supported: you can choose whether to vMotion the cache along with the VM or to recreate it on the destination host. vSphere HA is also supported, but when the VM starts the cache will need to be recreated on the recovery host.

Read more…

Categories: ESX, Update Manager, vCenter, vCloud Director, vCOPS, View, VMware, VMworld Tags: esxi, networking, storage, vcenter, vmware, vmworld

VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

To read the updates for all the suite components, see my post: What’s New in vCloud Suite 5.5: Introduction

vCenter SSO gets one of the major updates. This is welcome news to anyone who installed SSO in vSphere 5.1 which was plagued with an overly complex and restrictive design. SSO in 5.1 was apparently an OEM component which VMware customised. SSO in 5.5 has been completely rewritten from the ground up internally.

Evolving vCenter is a major undertaking as it was originally built as a monolithic platform with everything included in one place. VMware’s strategy is to pull out all the core central services from vCenter and have them run stand-alone.

In the future, vCenter may not in fact be the only management option. I can think of other future management options such as OpenStack or even Microsoft System Center or some other partner management ecosystem, all obviously at cloud scale. Today SSO has been re-built to scale serving vCloud Director, vCenter Orchestrator and Horizon View.

What’s New:

The whole architecture has been redesigned with a multi-master model with built-in replication both between and within sites. There are no longer primary and secondary SSO servers. Site awareness is part of the design, you can add new sites and SSO can be aware of the original site.

Read more…

Categories: ESX, Update Manager, vCenter, vCloud Director, vCOPS, View, VMware, VMworld Tags: esx, esxi, SSO, vCD, vcenter, vcops, view, vmware, vmworld

VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

To read the updates for all the suite components, see my post: What’s New in vCloud Suite 5.5: Introduction

vCenter Server has been tweaked with this upgrade keeping its two deployment options, installed on a Windows Server or as an appliance.

VMware is strongly recommending using a single VM for all vCenter Server core components (SSO, Web Client, Inventory Service and vCenter Server) or using the appliance, rather than splitting things out, which just adds complexity and makes it harder to upgrade in the future.

What’s New:

vCenter

vCenter 5.5 on Windows has been made much more efficient with many performance improvements in the database and overall system. vCenter 5.5 on Windows now supports up to 1,000 hosts and 10,000 VMs. The vCenter Appliance has also been beefed up and with its embedded database supports 500 hosts and 5,000 VMs, or if you use an external Oracle DB (no MSSQL support planned) the supported hosts and VMs are the same as for Windows.
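Those limits make for a quick sizing check. The Python below is just a lookup built from the numbers quoted above; the dictionary name `LIMITS`, the option labels and the function `supported_options` are my own and not anything VMware ships.

```python
# (max hosts, max VMs) per deployment option, as quoted in this post.
LIMITS = {
    "Windows": (1000, 10000),
    "Appliance (embedded DB)": (500, 5000),
    "Appliance (external Oracle DB)": (1000, 10000),
}


def supported_options(hosts, vms):
    """Return the deployment options whose quoted limits cover this inventory."""
    return [name for name, (max_hosts, max_vms) in LIMITS.items()
            if hosts <= max_hosts and vms <= max_vms]


# 600 hosts is too many for the embedded database on the appliance.
print(supported_options(600, 4000))
```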

The vCenter 5.5 installation has changed the order of the simple install from 5.1 by swapping the order of the Web Client and Inventory Service installations so you have the Web Client available earlier on in the install chain for troubleshooting if something goes wrong.

IBM DB2 is no longer supported as a database for vCenter Server 5.5.

Read more…

Categories: ESX, Update Manager, vCenter, vCloud Director, vCOPS, View, VMware, VMworld Tags: esxi, vcenter, vmware, vmworld

VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

I would say that this is an evolutionary rather than revolutionary update, being the third major release in the vSphere 5 family (5.0, 5.1, 5.5).

There are however some significant storage additions such as Virtual SAN (VSAN) and VMware Virtual Flash (vFlash) as well as a new vSphere App HA to provide application software high availability which is in addition to vSphere HA.

VMware has also responded to customer frustration over Single Sign-On (SSO), which is an authentication proxy for vCenter, and made some changes to SSO to hopefully make it easier to deploy. Every component of the suite has been updated in some way, which is an impressive undertaking to get everything in sync.

Here are all the details:

- What’s New in vCloud Suite 5.5: Introduction

- What’s New in vCloud Suite 5.5: vCenter Server and ESXi

- What’s New in vCloud Suite 5.5: vCenter Server SSO fixes

- What’s New in vCloud Suite 5.5: Virtual SAN (VSAN)

- What’s New in vCloud Suite 5.5: VMware Virtual Flash (vFlash)

- What’s New in vCloud Suite 5.5: vCloud Director

- What’s New in vCloud Suite 5.5: vCenter Orchestrator

- What’s New in vCloud Suite 5.5: vCloud Networking & Security

- What’s New in vCloud Suite 5.5: vSphere App HA

- What’s New in vCloud Suite 5.5: vSphere Replication and vCenter Site Recovery Manager

VMware is certainly evolving its strategy of the software defined data center. This release puts software defined storage (SDS), a multi-year project, on the map, at least from a VMware perspective. VMware vVolumes hasn’t made it into this release, which shows what a major undertaking it is. We will have to wait for vSphere 6!

SDS is going to have a huge push this year from VMware and of course all the other storage vendors, expect some exciting innovation.

Software defined networking is the next traditional IT infrastructure piece to “Defy convention” and is arguably by far the hardest one to change. Another multi-year project is just beginning.

Categories: ESX, Update Manager, vCenter, vCloud Director, vCOPS, View, VMware, VMworld Tags: esx, esxi, networking, storage, UpdateManager, vCD, vcenter, vcops, view, vmware, vmworld

HP has released a new firmware version 4.2.401.2215 of its Emulex OneConnect 10Gb Ethernet Controller which is used in HP G7 and Gen8 Blades.

The built-in LOM adapter names on a G7 Blade server are NC553i or NC551i and the Mezz card is NC554m. The CNA LOM added to a Gen8 Blade server is HP554FLB.

This firmware is required if you are going to be using the new dual hop FCoE features in Virtual Connect 4.01.

The firmware update process is offline and requires you to boot from an Emulex OneConnect bootable .ISO which can be downloaded from:

http://h20000.www2.hp.com/bizsupport/TechSupport/SoftwareDescription.jsp?lang=en&cc=us&prodTypeId=329290&prodSeriesId=5033632&prodNameId=5033634&swEnvOID=54&swLang=8&mode=2&taskId=135&swItem=co-117280-1

The following issues have been resolved since the previous firmware version 4.2.401.605:

- Fixed VMware PSOD on ESX 4.1/5.0/5.1 seen on BL465c G7 and BL685c G7 servers with BE2 based card. For more details see the following Customer Advisory

- Fixed FW memory leak with multiple logins to a redirected target

- Fixed FW to allow setting Speed/Duplex to Auto/Auto

- Fixed FW to allow Bonding with Citrix platforms

- Fixed FW to allow upgrades of version 4.x to 4.x+ without requiring cold boot

- Fixed UEFI to not require 2nd reset

- Fixed UEFI driver version reported

- Fixed FW to prevent LOMs at 10G from negotiating 1G with Blade switches

- Fixed FW for BE3 to report correct values of ethernet stat “In Range” error

- Fixed FW to boot from secondary iSCSI when selected

- Fixed FW iSCSI boot failure from secondary target when primary target given is incorrect

- Fixed UEFI NIC default settings retention

- Fixed FW hang during boot with SLES 10 SP4

- Fixed FW error while performing Shared Uplink Set port remove/add test

I’ve updated my post: HP Virtual Connect Flex-10 & VMware ESX(i) pre-requisites

HP has released an advisory saying that with versions of the Emulex NIC driver firmware before 4.1.450.7, Device Control Channel (DCC) may not work, which could cause your blades with NC55x NICs to drop off the network if the upstream network connection fails. This is an issue which has plagued HP blade networking since the beginning and was a particular problem with the G6 Broadcom NICs, so it’s disconcerting to see similar issues resurface.

http://h20566.www2.hp.com/portal/site/hpsc/template.PAGE/public/kb/docDisplay/?javax.portlet.endCacheTok=com.vignette.cachetoken&javax.portlet.prp_ba847bafb2a2d782fcbb0710b053ce01=wsrp-navigationalState%3DdocId%253Demr_na-c03600027-2%257CdocLocale%253D%257CcalledBy%253D&javax.portlet.begCacheTok=com.vignette.cachetoken&javax.portlet.tpst=ba847bafb2a2d782fcbb0710b053ce01&ac.admitted=1369727818792.876444892.199480143

4.1.450.7 is older firmware however and my post HP Virtual Connect Flex-10 & VMware ESX(i) pre-requisites recommends Emulex firmware version 4.2.401.605 so if you haven’t updated, now is the time.
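If you are auditing firmware levels across a lot of blades, be careful how you compare these dotted version numbers. Here is a small Python sketch of my own (the function names `parse_version` and `needs_update` are hypothetical) that compares them numerically, part by part, rather than as text.

```python
def parse_version(text):
    """Turn '4.2.401.605' into (4, 2, 401, 605) so parts compare as numbers."""
    return tuple(int(part) for part in text.split("."))


def needs_update(installed, minimum):
    """True if the installed firmware is older than the minimum version."""
    return parse_version(installed) < parse_version(minimum)


# The version in the advisory is older than the one my pre-requisites post recommends.
print(needs_update("4.1.450.7", "4.2.401.605"))
# A plain text comparison gets this pair wrong, because "2215" sorts before "605".
print(needs_update("4.2.401.2215", "4.2.401.605"))
```

Comparing the raw strings would claim that 4.2.401.2215 is older than 4.2.401.605, which is exactly the sort of thing that bites you in a spreadsheet export.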

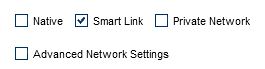

HP blade servers have hard wired connections to their immediate upstream Virtual Connect switch via the chassis backplane. This means in normal operation the NIC on the blade will never see a down state unless the physical Virtual Connect switch is powered off or removed. This would still be the case even if the upstream network connection failed if it wasn’t for DCC. DCC is a firmware component: when Virtual Connect sees that all uplinks for an Ethernet Network are down, it sends a command down to the individual FlexNICs to tell them to mark the port as down so the blade can fail over traffic to another NIC in a port group or team. DCC is what SmartLink relies on, and it requires coordination between the Virtual Connect firmware and the blade NIC firmware, which in the past has caused a lot of issues.
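The decision DCC/SmartLink makes is simple enough to write down. The Python below only models the logic described above and has nothing to do with the real firmware: the function name `server_port_states`, the data structures and the port and network names are all made up for illustration.

```python
def server_port_states(network_uplinks, port_networks):
    """Work out which server-facing ports should be marked down.

    network_uplinks: dict of network name -> list of booleans (is uplink up?)
    port_networks:   dict of server port name -> network name it is mapped to
    Returns a dict of server port name -> "up" or "down".
    """
    states = {}
    for port, network in port_networks.items():
        # A port stays up while at least one uplink for its network is up.
        any_uplink_up = any(network_uplinks.get(network, []))
        states[port] = "up" if any_uplink_up else "down"
    return states


# Hypothetical example: every uplink for the "Prod" network has failed.
uplinks = {"Prod": [False, False], "Mgmt": [True, False]}
ports = {"Blade1-LOM1-a": "Prod", "Blade1-LOM1-b": "Mgmt"}
print(server_port_states(uplinks, ports))
```

Without that step the blade would keep sending traffic into a Virtual Connect module that has nowhere to forward it, because from the blade’s side the link never goes down.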

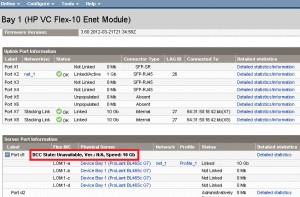

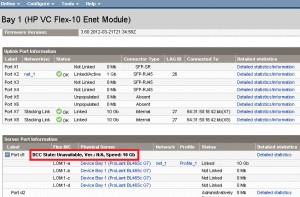

Something I noticed in the advisory however was you can actually now see whether DCC/SmartLink is working for each individual network connection. Navigate to the Interconnect Bays in the VCM GUI, then navigate to the server ports and view the DCC state:

{Source: HP}

This looks like a recent addition to Virtual Connect firmware and will certainly help troubleshooting network connection issues but hopefully the DCC disconnect debacle won’t be repeated.
