VMware has finally officially announced what is to be included in vSphere 6.0 after lifting the lid on parts of the update during VMworld 2014 keynotes and sessions.

See my introductory post: What’s New in vSphere 6.0: Finally Announced (about time!) for details of all the components.

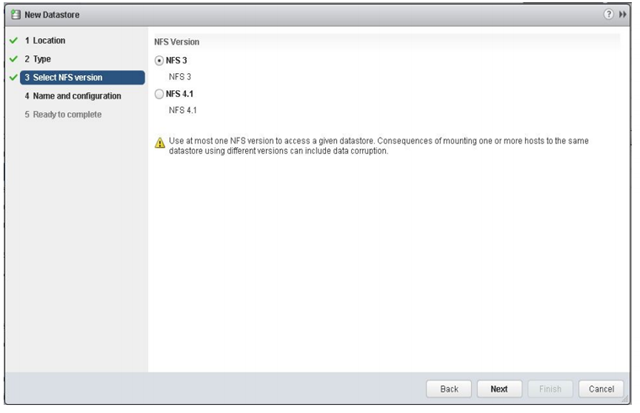

NFS has been available as a storage protocol since 2006 with ESX 3.0 and vSphere has been using NFS version 3 for all this time. There’s been no update to how NFS works.

I’ve been a massive fan of NFS since it was released. No LUNs, much bigger datastores and far simpler management. Being able to move around, back up and restore VM disk files natively from the storage array is extremely powerful. NFS datastores are thin-provisioned by default, which allows your VM admin and storage admin to agree on actual storage space utilisation.

However, good old NFSv3 has a number of limitations: there is no multi-pathing support, security is limited and performance is capped by the single server head.

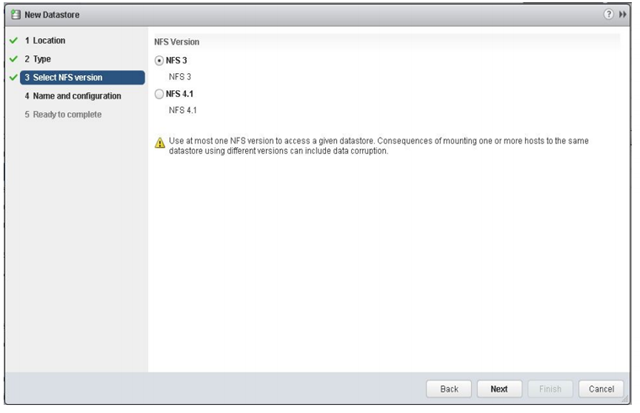

vSphere 6.0 introduces NFS v4.1 to solve many of these limitations.

NFS 4.1 introduces multi-pathing by supporting session trunking, which uses multiple remote IPs in a single session. Not all vendors will support this, so it’s best to check. You can now get increased performance from load-balanced, parallel access, and with it comes better availability from path failover.
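To make the load-balancing and failover idea concrete, here is a minimal Python sketch of a client that spreads requests round-robin across several server IPs and skips any path marked as down. It is a toy model of the behaviour described above, not how ESXi actually implements session trunking; the class name `TrunkedSession` and the IP addresses are made up for illustration.

```python
from itertools import cycle


class TrunkedSession:
    """Toy model: one logical session reachable over several server IPs."""

    def __init__(self, server_ips):
        if not server_ips:
            raise ValueError("at least one server IP is required")
        self.server_ips = list(server_ips)
        self.down = set()                  # paths currently marked as failed
        self._rr = cycle(self.server_ips)  # round-robin iterator over all paths

    def mark_down(self, ip):
        self.down.add(ip)

    def mark_up(self, ip):
        self.down.discard(ip)

    def next_path(self):
        """Return the next healthy path, or raise if every path is down."""
        for _ in range(len(self.server_ips)):
            ip = next(self._rr)
            if ip not in self.down:
                return ip
        raise ConnectionError("all paths down")


# Hypothetical addresses for two interfaces on the same NFS server.
session = TrunkedSession(["192.168.10.11", "192.168.10.12"])
print([session.next_path() for _ in range(4)])  # alternates between both IPs
session.mark_down("192.168.10.11")
print([session.next_path() for _ in range(2)])  # only the surviving IP is used
```

With a single IP, as with NFSv3, marking that one path down leaves nothing to fail over to, which is exactly the limitation trunking is meant to remove.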

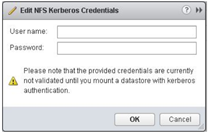

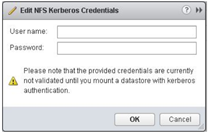

There is improved security using Kerberos authentication. You need to add your ESXi hosts to AD and specify a Kerberos user before creating any NFSv4.1 datastores with Kerberos enabled. You then use this Kerberos username and password to authenticate against the NFS mount. All files stored in any Kerberos-enabled datastore will be accessed using this single user’s credentials. You should always use the same user on all hosts, otherwise vMotion and other features might fail if two hosts use different Kerberos users. NTP is also a requirement, as usual when using Kerberos. This configuration can be automated with Host Profiles.
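Since a mismatch in Kerberos users between hosts can break vMotion, it may be worth checking your inventory before mounting anything. The Python sketch below assumes you have already collected a mapping of host name to configured Kerberos user by some means (the host names, user names and the function `find_kerberos_mismatches` are all hypothetical); it does not talk to vCenter or ESXi.

```python
from collections import defaultdict


def find_kerberos_mismatches(host_users):
    """Group hosts by Kerberos user.

    Returns {user: [hosts]} when more than one user is in use,
    or an empty dict when every host uses the same user.
    """
    by_user = defaultdict(list)
    for host, user in host_users.items():
        by_user[user].append(host)
    return dict(by_user) if len(by_user) > 1 else {}


# Hypothetical inventory: esx03 was configured with a different user.
inventory = {
    "esx01": "svc-nfs@EXAMPLE.LOCAL",
    "esx02": "svc-nfs@EXAMPLE.LOCAL",
    "esx03": "svc-nfs2@EXAMPLE.LOCAL",
}
print(find_kerberos_mismatches(inventory))
```

An empty result means all hosts agree; anything else lists which hosts share which user so you can see the odd one out.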

NFSv4.1 now allows you to use a non-root user to access files. RPC header authentication has also been added to boost security. It only supports DES-CBC-MD5, which is universally available, rather than the stronger AES-HMAC, which is not supported by all vendors. Locking has been improved with in-band mandatory locks using share reservations as a locking mechanism. There is also better error recovery.

There are some caveats, however, to using NFS v4.1. NFSv4.1 is not compatible with SDRS, SIOC, SRM or VVols, but you can continue to use NFSv3 datastores for these.
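The caveat list above boils down to a simple lookup when planning a datastore. Here is a small Python sketch of that decision; the function name `pick_nfs_version` is made up, and it only considers the four features named above, so it will not tell you that NFSv3 lacks multi-pathing or Kerberos.

```python
# Features the post lists as not compatible with NFSv4.1 datastores in vSphere 6.0.
UNSUPPORTED_ON_NFS41 = {"SDRS", "SIOC", "SRM", "VVols"}


def pick_nfs_version(required_features):
    """Return (version, blockers).

    version is "3" if any required feature is unsupported on NFSv4.1,
    otherwise "4.1"; blockers is the sorted list of offending features.
    """
    blockers = sorted(UNSUPPORTED_ON_NFS41 & set(required_features))
    return ("3", blockers) if blockers else ("4.1", [])


print(pick_nfs_version({"SIOC", "vMotion"}))  # ('3', ['SIOC'])
print(pick_nfs_version({"vMotion"}))          # ('4.1', [])
```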

NFSv3 locking is not compatible with NFSv4.1. You must not mount an NFS share as NFSv3 on one ESXi host and mount the same share as NFSv4.1 on another host. It’s best to configure your array to use one NFS protocol, either NFS v3 or v4.1, but not both.
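Mixed mounts of the same share are easy to create by accident across a cluster, so a quick audit can help. The following Python sketch assumes you have exported a list of `(host, share, version)` tuples from your environment by whatever means you prefer; the function name, host names and share paths are hypothetical.

```python
from collections import defaultdict


def find_mixed_protocol_shares(mounts):
    """Find shares mounted with more than one NFS version.

    mounts: iterable of (host, share, version) tuples, version "3" or "4.1".
    Returns {share: {version: [hosts]}} for the offending shares only.
    """
    seen = defaultdict(lambda: defaultdict(list))
    for host, share, version in mounts:
        seen[share][version].append(host)
    return {share: dict(v) for share, v in seen.items() if len(v) > 1}


# Hypothetical inventory: /vol/ds1 is mounted as v3 on one host and v4.1 on another.
mounts = [
    ("esx01", "nas01:/vol/ds1", "3"),
    ("esx02", "nas01:/vol/ds1", "4.1"),
    ("esx01", "nas01:/vol/ds2", "4.1"),
    ("esx02", "nas01:/vol/ds2", "4.1"),
]
print(find_mixed_protocol_shares(mounts))
```

Any share that shows up in the result should be remounted so every host uses the same protocol version.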

The protocol has also been made more efficient and less chatty by compounding operations and removing the file lock heartbeat and session lease.

All-paths-down handling is now different with multi-pathing support. The clock skew issue that caused an all-paths-down condition in vSphere 5.1 and 5.5 has been fixed in vSphere 6.0 for both NFSv3 and NFSv4.1. With multi-pathing, IO can fail over to other paths if one path goes down, so there is no longer any single point of failure.

No support for pNFS will be available for ESXi 6.0. This has caused some confusion, so it’s best to have a look at Hans de Leenheer’s post: VSPHERE 6 NFS4.1 DOES NOT INCLUDE PARALLEL STRIPING!

Very happy to see NFSv4.1 see the light of day with vSphere, at least for the multi-pathing, as its absence caused many people to go down the block protocol route with the added complexity of LUNs. However, it’s a pity NFSv4.1 is not supported with VVols. I’m sure VMware must be working on this.
