What’s New in vSphere 6.0: Content Library

VMware has finally officially announced what is to be included in vSphere 6.0 after lifting the lid on parts of the update during VMworld 2014 keynotes and sessions.

See my introductory post: What’s New in vSphere 6.0: Finally Announced (about time!) for details of all the components.

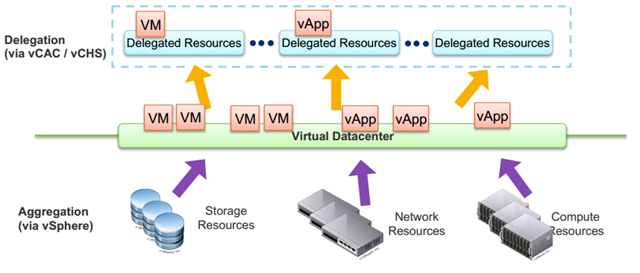

Content Library is a planned new addition to vSphere 6.0 which was talked about for the first time in a session at VMworld. Content Library is a way to centrally store VM templates, vApps, ISO images and scripts.

This content can be synchronised across sites and vCenters. Synchronised content makes it easier to deploy consistent workloads at scale. Consistent content is also easier to automate against and easier to keep in compliance, and it makes an admin’s life more efficient.

Content Library provides basic versioning of files in this release. It also has a publish and subscribe mechanism to replicate content between local and remote vCenter Servers, which by default synchronises every night. Changes to descriptions, tags and other metadata will not trigger a version change. There is no de-duplication at the Content Library level, but storage arrays may do that behind the scenes.
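To make the versioning rule concrete, here is a minimal toy model in Python. It is not the vSphere API: the class and method names (`LibraryItem`, `PublishedLibrary`, `SubscribedLibrary`, `sync`) are made up for illustration, and the sketch assumes that a sync only copies items whose version number differs. What happens to metadata during a sync isn't covered above, so the sketch leaves it alone.

```python
from dataclasses import dataclass, field


@dataclass
class LibraryItem:
    """Hypothetical stand-in for a library item (template, ISO, script)."""
    name: str
    content: bytes
    description: str = ""
    version: int = 1

    def update_content(self, new_content: bytes) -> None:
        # Only a real change to the file itself bumps the version.
        if new_content != self.content:
            self.content = new_content
            self.version += 1

    def update_description(self, text: str) -> None:
        # Metadata edits leave the version untouched.
        self.description = text


@dataclass
class PublishedLibrary:
    """Hypothetical publisher side: holds the master copy of each item."""
    items: dict = field(default_factory=dict)

    def add(self, item: LibraryItem) -> None:
        self.items[item.name] = item


@dataclass
class SubscribedLibrary:
    """Hypothetical subscriber side: pulls from one published library."""
    source: PublishedLibrary
    items: dict = field(default_factory=dict)

    def sync(self) -> list:
        """Copy items that are missing or whose version differs.

        Returns the names of the items that were transferred.
        """
        transferred = []
        for name, item in self.source.items.items():
            local = self.items.get(name)
            if local is None or local.version != item.version:
                self.items[name] = LibraryItem(
                    name, item.content, item.description, item.version
                )
                transferred.append(name)
        return transferred


if __name__ == "__main__":
    published = PublishedLibrary()
    iso = LibraryItem("tools.iso", b"build-1")
    published.add(iso)

    subscriber = SubscribedLibrary(published)
    print(subscriber.sync())            # first sync copies the new item

    iso.update_description("VMware Tools ISO")
    print(iso.version, subscriber.sync())   # metadata edit: same version

    iso.update_content(b"build-2")
    print(iso.version, subscriber.sync())   # content change: new version
```

In this model the nightly job would simply call `sync()` on each subscriber; a description or tag edit on the publisher never causes the file to be copied again.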

Content Library can also sync between vCenter and vCloud Director.

The content itself is stored either in vSphere datastores or, preferably, on a local vCenter file system, since the contents are then stored in a compressed format. A local file system is one presented directly to the vCenter Server: for a Windows vCenter this can be an additional drive or folder, but for the vCenter Appliance the preferred approach is to mount an NFS share directly on the appliance. This may mean amending your storage networking, as many installations have segregated storage networks that are directly accessible by hosts to store VMs but not by vCenter.
