Adding some more colour to the highlights from my VMworld Europe 2015 coverage:

Richard McDougall, a Principal Engineer at VMware, led this presentation peeking into the future.

This session was about the future and trends for storage hardware and next-gen distributed services: shared NVMe/PCIe at rack scale, flash densities and whether magnetic storage still has a place.

Richard gave an interesting talk explaining the needs of Big Data, NoSQL and similar applications and their storage requirements. He built up a graph using two axes: horizontal for size, from 10s of TBs to 10s of PBs, and vertical for IOPS, from 1,000 to 1,000,000.

He built up the picture showing where various memory and storage applications sit and then added which hardware and software platforms are used to service these applications. It was a great visual aid.

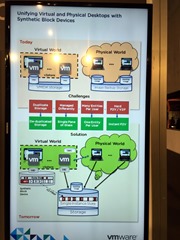

He spent time going through how cloud native applications and containers still have a storage requirement. Some options are copying the whole root tree or, as Docker does, cloning using another union file system (aufs), which works like redo logs for VMDKs.

Containers still need files, not blocks, and need snapshots and clones. You need a non-persistent boot environment as well as somewhere to put persistent data. Shared volumes may be needed, as well as an object store for retention/archive.
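To make the redo-log comparison a bit more concrete, here is a minimal Python sketch of how a union-style, copy-on-write file tree behaves. It is my own illustration, not how aufs or Docker is actually implemented: the `Layer` class and its methods are hypothetical names, and files are just strings held in memory. The idea is that a clone is a new, empty layer on top of a shared read-only parent, so clones are cheap and writes never touch the base image.

```python
class Layer:
    """One layer of a union-style file tree (hypothetical, in-memory only)."""

    _DELETED = object()  # "whiteout" marker hiding a file from lower layers

    def __init__(self, parent=None):
        self.parent = parent  # lower layer, treated as read-only once cloned
        self.files = {}       # path -> contents changed in this layer only

    def read(self, path):
        # Search from the top layer down; the first layer that knows the path wins.
        layer = self
        while layer is not None:
            if path in layer.files:
                data = layer.files[path]
                if data is Layer._DELETED:
                    raise FileNotFoundError(path)
                return data
            layer = layer.parent
        raise FileNotFoundError(path)

    def write(self, path, data):
        # Copy-on-write: the change lands in this layer, never in the parent.
        self.files[path] = data

    def delete(self, path):
        # Record a whiteout rather than modifying the lower layers.
        self.files[path] = Layer._DELETED

    def clone(self):
        # A clone is just a new empty layer stacked on top of this one.
        return Layer(parent=self)


# A shared base image and two containers cloned from it.
base = Layer()
base.write("/etc/motd", "base image")
container_a = base.clone()
container_b = base.clone()

container_a.write("/etc/motd", "patched in A")
print(container_a.read("/etc/motd"))
print(container_b.read("/etc/motd"))
```

Only `container_a` sees its own change; `container_b` and the base image are untouched, which is the same trick a VMDK redo log plays on top of a base disk.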

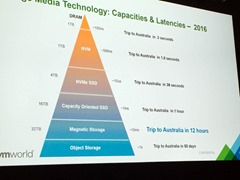

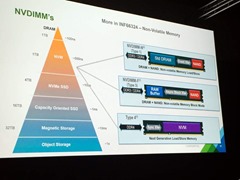

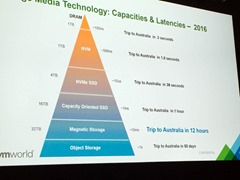

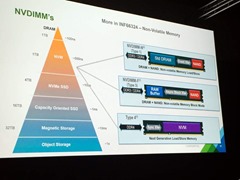

Richard went on to talk about hardware and the massive increase in performance for NVDIMMs, which are getting closer to DRAM. Have a look at the comparison chart, which expresses the relative latencies as travel time from California to Australia.

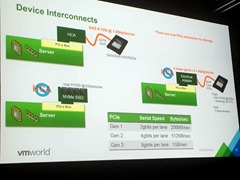

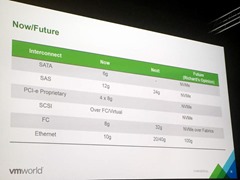

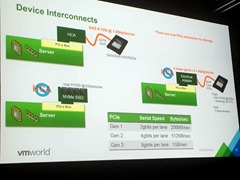

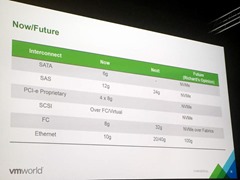

He then went through some of the device interconnects and posited that NVMe will take over from most current interconnect methods. He was very positive about NVMe!

He mentioned how hard it is to actually build true scale-out, performant storage.

He mentioned a great use case for caching companies like PernixData: in the future they could be used to front-end things like S3 storage, so you could have massive buckets in the cloud yet get very fast, locally cached access. Interesting.
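The caching idea can be sketched in a few lines of Python. This is purely my illustration of a read-through cache with least-recently-used eviction, not PernixData's product or the S3 API: `ReadThroughCache`, the `fetch` callback and the `bucket` dictionary standing in for a remote object store are all hypothetical.

```python
from collections import OrderedDict


class ReadThroughCache:
    """Small local cache in front of a slow, large backing store (hypothetical)."""

    def __init__(self, fetch, capacity):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self.fetch = fetch           # callable that reads from the slow store
        self.capacity = capacity     # maximum number of objects kept locally
        self._items = OrderedDict()  # key -> value, oldest access first
        self.hits = 0
        self.misses = 0

    def get(self, key):
        if key in self._items:
            # Local hit: mark as most recently used and skip the slow store.
            self._items.move_to_end(key)
            self.hits += 1
            return self._items[key]
        # Miss: go to the backing store, then keep a local copy.
        self.misses += 1
        value = self.fetch(key)
        self._items[key] = value
        if len(self._items) > self.capacity:
            # Evict the least recently used object to stay within capacity.
            self._items.popitem(last=False)
        return value


# An in-memory dict standing in for a huge remote bucket.
bucket = {"logs/a": b"alpha", "logs/b": b"beta", "logs/c": b"gamma"}
cache = ReadThroughCache(fetch=bucket.__getitem__, capacity=2)

for key in ["logs/a", "logs/a", "logs/b", "logs/c"]:
    cache.get(key)

print(cache.hits, cache.misses)
```

The bucket can be as big as you like; only the hot objects need to live on the fast local tier, and everything else is fetched on demand.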

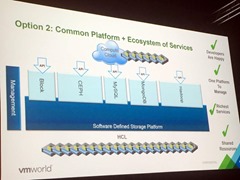

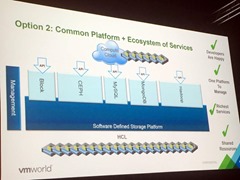

The dream is a single common storage platform, with a single HCL and a common software-defined storage layer, that can be used for Block, Ceph, MySQL, MongoDB, Hadoop etc. I think that’s what VMware is trying to make VSAN do.

This is very difficult to achieve, but I can certainly see a future VSAN, not too far away, with native SMB and NFS access as well as persistent storage for containers running on the Photon Platform. This would give you the best of both worlds: stateless containers running natively, as well as stateful containers whose data is stored locally within the container and replicated to other nodes in the VSAN cluster, as they are VMs. Other services could access file data natively on VSAN over SMB and NFS, and that data would also be replicated across the cluster and across sites for DR.
