VMware has finally officially announced what is included in vSphere 6.0, after lifting the lid on parts of the update during the VMworld 2014 keynotes and sessions.

See my introductory post: What’s New in vSphere 6.0: Finally Announced (about time!) for details of all the components.

UPDATE 02/02/2015: The Virtual Data Center and Policy Based Management components, both talked about at VMworld, have been pulled from the final release. It seems VMware needs more time to work out which policy and automation functionality goes into vRealize Automation Center, vCloud Director and vCenter itself. It’s a shame, as these components were real enablers for the SDDC; being able to control VM placement by policy will have to wait for another day.

Briefly shown in the VMworld Day 2 keynote demos was the deployment of a VM to a Virtual Datacenter, which is planned as a new addition to vSphere. Well, it is a new addition, but it’s an old name brought back to life. The whole message of vSphere 4.0 was about creating a “Virtual Datacenter”: you could move physical machines into your virtual datacenter! Now we’ve come full circle and “Virtual Data Centers” are back!

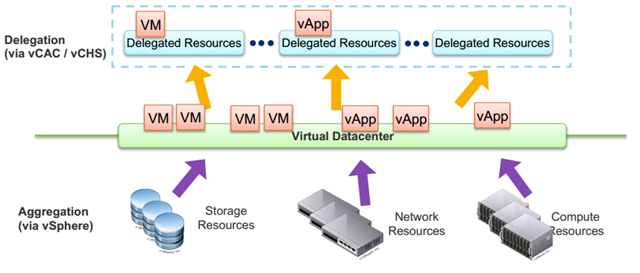

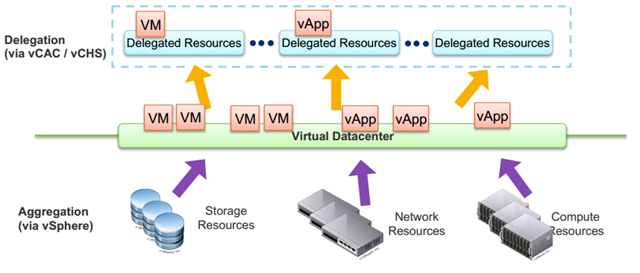

In vSphere 6.0, a Virtual Datacenter aggregates compute clusters, storage clusters, network and policies. In this first release, a virtual datacenter can aggregate resources across multiple clusters within a single vCenter Server into a single large pool of capacity. This will benefit large deployments such as VDI, where you have multiple clusters with similar network and storage connections that you can now group together.

Within this single pool of capacity, the Virtual Data Center will automate VM initial placement by deciding in which cluster the VM should be placed based on capacity and capability.
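
To make the idea of “placement based on capacity and capability” concrete, here is a minimal Python sketch. Since the feature was pulled before release, there is no public API to show; `Cluster`, `VMRequest` and `place_vm` are hypothetical names, and the tie-break (prefer the cluster with the most memory headroom) is an assumed heuristic, not VMware’s actual algorithm. It filters clusters by capability first, then by free capacity, and picks one of the survivors.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical stand-in for a DRS-enabled cluster in a Virtual Data Center."""
    name: str
    free_cpu_mhz: int
    free_mem_mb: int
    capabilities: set[str] = field(default_factory=set)


@dataclass
class VMRequest:
    """Hypothetical VM deployment request: resource needs plus required capabilities."""
    name: str
    cpu_mhz: int
    mem_mb: int
    required_capabilities: set[str] = field(default_factory=set)


def place_vm(vm: VMRequest, clusters: list[Cluster]) -> Cluster | None:
    """Choose a cluster for initial placement, or None if nothing fits."""
    # Capability filter: the cluster must offer everything the VM asks for.
    # Capacity filter: the cluster must have enough free CPU and memory.
    candidates = [
        c for c in clusters
        if vm.required_capabilities <= c.capabilities
        and c.free_cpu_mhz >= vm.cpu_mhz
        and c.free_mem_mb >= vm.mem_mb
    ]
    if not candidates:
        return None
    # Assumed heuristic: prefer the most memory headroom left, then CPU headroom.
    return max(
        candidates,
        key=lambda c: (c.free_mem_mb - vm.mem_mb, c.free_cpu_mhz - vm.cpu_mhz),
    )


clusters = [
    Cluster("vdi-a", free_cpu_mhz=20000, free_mem_mb=64000, capabilities={"ssd"}),
    Cluster("vdi-b", free_cpu_mhz=30000, free_mem_mb=128000, capabilities={"ssd"}),
    Cluster("sql", free_cpu_mhz=40000, free_mem_mb=256000, capabilities={"sql-licensed"}),
]
desktop = VMRequest("desktop-01", cpu_mhz=2000, mem_mb=4096, required_capabilities={"ssd"})
print(place_vm(desktop, clusters).name)  # vdi-b
```

The “sql” cluster has the most free memory but is skipped because it lacks the “ssd” capability, which is the point of filtering on capability before capacity.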

You can then create VM placement and storage policies and associate them with specific clusters or hosts, as well as the datastores they are connected to. For example, a policy might keep SQL VMs on a subset of hosts within a particular cluster for licensing reasons. You can then monitor adherence to these policies and automatically remediate any issues. When you deploy a VM, you select from the available policies and the Virtual Datacenter decides, based on those policies, where the VM is placed. Again, the aim is to reduce the opex cost of admins deciding where VMs are placed.
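
The SQL licensing example boils down to a compliance check plus a remediation step. The sketch below models that in Python under stated assumptions: `PlacementPolicy`, `find_violations` and `remediate` are hypothetical, not a VMware API, and the remediation target (the alphabetically first allowed host) is chosen purely for determinism, whereas a real scheduler would also weigh load.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class PlacementPolicy:
    """Hypothetical policy: VMs tagged `vm_tag` may only run on `allowed_hosts`."""
    name: str
    vm_tag: str
    allowed_hosts: frozenset[str]


@dataclass
class VM:
    name: str
    tags: set[str]
    host: str


def find_violations(vms: list[VM], policies: list[PlacementPolicy]) -> list[tuple[VM, PlacementPolicy]]:
    """Compliance check: every (VM, policy) pair where a tagged VM sits on a disallowed host."""
    return [
        (vm, p)
        for vm in vms
        for p in policies
        if p.vm_tag in vm.tags and vm.host not in p.allowed_hosts
    ]


def remediate(vms: list[VM], policies: list[PlacementPolicy]) -> list[tuple[str, str, str]]:
    """Move each violating VM onto an allowed host and return (vm, from, to) moves.

    The target is the alphabetically first allowed host (an assumption for
    determinism, not VMware's logic).
    """
    moves = []
    for vm, policy in find_violations(vms, policies):
        target = sorted(policy.allowed_hosts)[0]
        moves.append((vm.name, vm.host, target))
        vm.host = target  # stands in for a vMotion
    return moves


sql_licensing = PlacementPolicy("sql-licensing", "sql", frozenset({"esx01", "esx02"}))
vms = [
    VM("sql-prod", {"sql"}, "esx05"),
    VM("web-01", {"web"}, "esx05"),
    VM("sql-dev", {"sql"}, "esx02"),
]
print(remediate(vms, [sql_licensing]))  # [('sql-prod', 'esx05', 'esx01')]
```

Only “sql-prod” is moved: “web-01” carries no SQL tag, and “sql-dev” already runs on a licensed host.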

Virtual Data Centers require clusters with DRS enabled to handle initial placement; individual hosts cannot be added. You can remove a host from a cluster within a Virtual Data Center by putting it into maintenance mode, and all its VMs stay within the VDC, moving to other hosts in the cluster. If you need to remove a cluster, or turn off DRS for any reason and can’t use Partially Automated Mode, you would remove the cluster from the Virtual Data Center. The VMs would stay in the cluster but would no longer have VM placement policy monitoring checks done until the cluster rejoins a Virtual Data Center. You could manually vMotion VMs to other clusters within the VDC before removing a cluster.
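
The membership rules above can be summarised as a small state model. This is a hypothetical Python sketch, not a VMware API: a plain host-name string stands in for an individual host, `VirtualDataCenter` and its methods are invented names, and maintenance mode is not modelled. It rejects individual hosts and clusters without DRS, and shows that a removed cluster drops out of policy monitoring.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Cluster:
    """Hypothetical cluster record; only the DRS flag matters for membership here."""
    name: str
    drs_enabled: bool


@dataclass
class VirtualDataCenter:
    """Hypothetical model of the VDC membership rules."""
    name: str
    members: dict[str, Cluster] = field(default_factory=dict)

    def add(self, member: object) -> None:
        # Only whole clusters can join; a bare host (a host-name string here) is rejected.
        if not isinstance(member, Cluster):
            raise TypeError("only clusters can be added to a Virtual Data Center")
        # DRS must be enabled because the VDC relies on it for initial placement.
        if not member.drs_enabled:
            raise ValueError(f"cluster {member.name!r} must have DRS enabled")
        self.members[member.name] = member

    def remove(self, cluster_name: str) -> Cluster:
        # The cluster keeps its VMs, but they drop out of placement-policy monitoring.
        return self.members.pop(cluster_name)

    def is_monitored(self, cluster_name: str) -> bool:
        return cluster_name in self.members


vdc = VirtualDataCenter("vdc-01")
vdc.add(Cluster("vdi-a", drs_enabled=True))
vdc.remove("vdi-a")
print(vdc.is_monitored("vdi-a"))  # False
```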

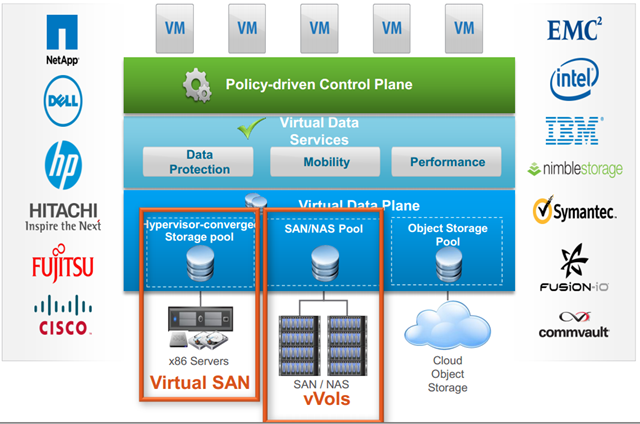

This is great, but I think the SDDC is just a stepping stone to what we are really trying to achieve: the “Policy Defined Data Center”.