Why vCenter is letting VMware’s side down

I’ve been meaning to write this post for ages. It’s been gnawing at my brain for months, begging to be written, so grab a big cuppa and settle down for a long one!

In my opinion, vCenter is now the major weakness in VMware’s software lineup. Unfortunately, it is a big fat single point of failure that just doesn’t cut it any more in terms of availability.

Let’s think back to when VirtualCenter, as it was then called, was unleashed on the world in 2003.

At the time it was the wonder application that connected your ESX servers together, enabling the game changer that was VMotion. You could easily provision VMs from templates, monitor your hosts and VMs, and generate alerts. The VMware SDK made PowerCLI possible, and PowerCLI is one of the best PowerShell examples out there. The VMware management layer was born.

Since then VirtualCenter has become vCenter, and until probably some time last year this was all good. It was a great single pane of glass to look at and manage your virtual environment: hosts, clusters, resource pools, DRS, vMotion, HA and so on.

It didn’t need to be highly available. If vCenter went down, vMotion and DRS would be affected and you wouldn’t be able to provision new VMs, but the VMs already running on your hosts would carry on unaffected. HA was configured in vCenter, but its information was held on the hosts. Even if vCenter failed, HA could still recover VMs in the event of a host failure.

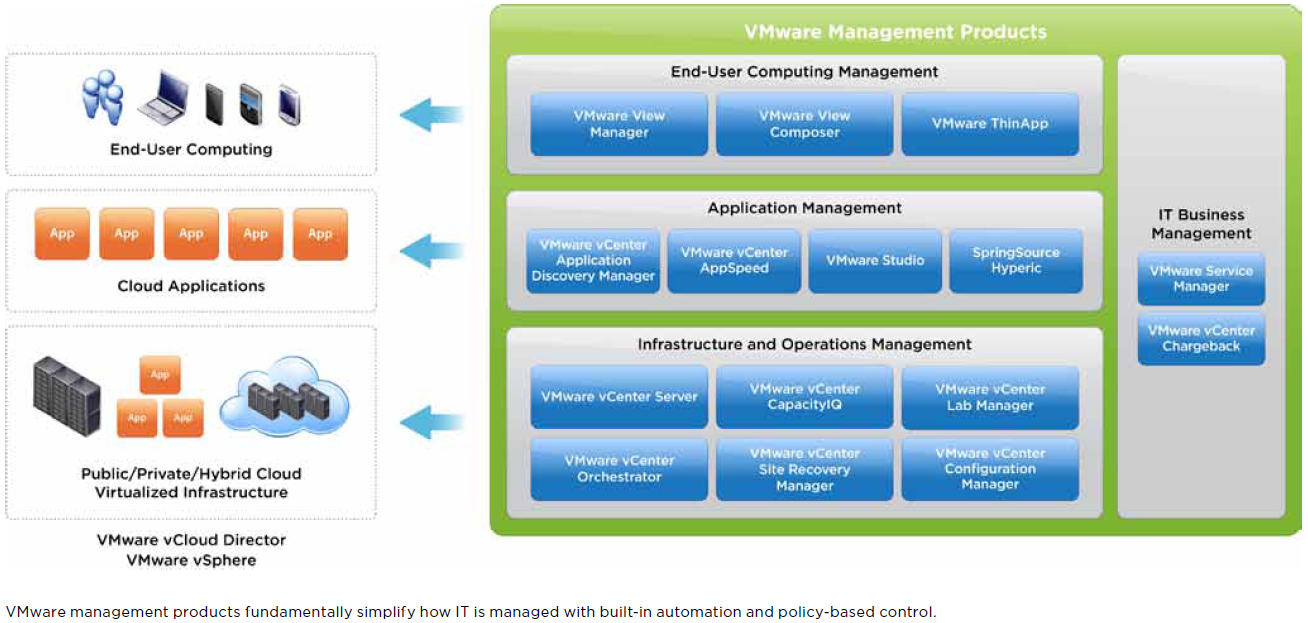

Now the situation is very different. More and more VMware management products rely on vCenter. Have a look at the VMware Management Products picture in the VMware Virtualization and Cloud Management solutions overview.

That’s a lot of applications that now rely on vCenter, and the picture doesn’t even cover everything.

You may have a number of custom scheduled PowerCLI scripts that pull out reporting information, such as the one mentioned in my previous post.

These may not be critical if vCenter isn’t available, but they do highlight how many more things now hook into vCenter.

The biggest hook-in, however, is VDI. A broker like VMware View, and even more so Citrix XenDesktop (I’ll be posting more on this soon), relies on vCenter in a way it was never designed for. Having your VMs up and running on the hypervisor used to be enough availability. With VDI, however, the broker needs to talk to vCenter to deliver VMs to users. If vCenter is not available, people cannot work, and that’s not good for anyone’s business.

Scale is also something that is now haunting vCenter. VMware has obviously done a lot of work scaling up vCenter, primarily to cope with the explosion of VDI.

Look at the vCenter maximums for vSphere 4.1:

| Maximum | vSphere 4.1 |
|---|---|
| Hosts per vCenter Server | 1000 |
| Powered-on virtual machines per vCenter Server | 10000 |
| Registered virtual machines per vCenter Server | 15000 |
| Linked vCenter Servers | 10 |
| Hosts in linked vCenter Servers | 3000 |
| Powered-on virtual machines in linked vCenter Servers | 30000 |
| Registered virtual machines in linked vCenter Servers | 50000 |

vCenter is getting seriously big. You can have up to 10000 powered-on VMs per instance and 30000 across a linked mode group.

vCenter was not designed from the beginning to be highly available. The underlying code is still written as a remote management application rather than as a critical part of your infrastructure.

Too many issues within the vCenter application can cause the service to fail. Erroneous entries in the vCenter database can crash the service, and these can relate to storage, networking or even VMs. Unfortunately, I’ve seen vCenter fail far too many times. One failure was caused by an erroneous storage entry in the database: whenever I tried to power on a VM on that datastore, the vCenter service crashed! vCenter itself had put those entries into the database, so why can’t it have better error detection to avoid these outages?

Here are just some of the VMware KB articles that mention the service crashing because of database issues, or upgrades failing because of the database:

http://kb.vmware.com/kb/1026319

http://kb.vmware.com/kb/1020317

http://kb.vmware.com/kb/1026009

http://kb.vmware.com/kb/1026688

Linked mode is a great addition when you have multiple vCenter instances, giving you an even bigger single pane of glass across all of them. However, licenses and roles are shared across the linked instances. As detailed in my previous post, troubleshooting linked mode issues can make you lose all permissions and licenses across every linked mode member. Ouch!

vCenter is also just too big for its boots. If you’ve ever had to restore the SQL database, or recover from a storage snapshot and copy out the disk files, you will know how much disk space even a moderately sized vCenter needs. vCenter uses a single database for all configuration, inventory, event and performance data, so it can grow very quickly.

The SQL database isn’t particularly enterprise friendly either. The install requires db_owner access to the MSDB database so that it can create the SQL Agent jobs that prune the performance data. Very few enterprise DBAs allow this kind of access to their tightly controlled, pristine corporate SQL environment, which is probably already clustered and highly available. You end up needing a dedicated SQL instance just for vCenter.

So what options do you have to make vCenter more available?

Making vCenter a VM is the best starting point. This has been a contentious issue for a while, but for me it is settled, and the benefits far outweigh any perceived issues. You immediately get some of the things that made you want to virtualise your servers in the first place: a starting level of availability from VMotion, DRS and HA, plus snapshots.

If you are going to get big, separate vCenter and SQL into two VMs (unless you are already using a corporate remote SQL installation). Unfortunately, FT won’t help you here. Your vCenter and SQL servers probably need more than a single vCPU.

As vCenter is now a VM, you can also provide easy business recovery by mirroring its storage to a remote site, as shown in this previous post. This is not the same as local high availability. It does, however, give you some protection, because you can bring up vCenter in the remote site.

Dedicate a datastore to your vCenter and SQL Servers so other VMs can’t use their disk space.

If you need more availability then you need additional software.

VMware’s product to address these availability concerns is VMware vCenter Server Heartbeat, a VMware-branded OEM version of Neverfail. Heartbeat keeps a duplicate copy of the vCenter and SQL Servers and uses Neverfail’s replication technology to keep both sides synchronised. Each side can be physical or virtual, or you can have one of each.

Heartbeat installs a network packet filter so that only one side of the pair is visible on the network. If the primary server fails, the secondary comes up on the network and you can connect to vCenter again. You can run Heartbeat in a LAN or a WAN configuration. In the WAN configuration, the two servers in the pair can sit in different sites with different IP addresses. When you fail over to the secondary, Heartbeat updates DNS with the new IP address. As long as your DNS TTL is short enough, clients can reconnect fairly quickly. This could solve local high availability and business recovery in one step. Unfortunately, the WAN configuration doesn’t seem to work as well as advertised. My own experience matches the feedback from VMworld, where plenty of people were discussing the issues. The general consensus is to use Heartbeat only in LAN mode.
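To show why the DNS TTL matters in WAN mode, here is a rough model of the worst-case outage a client might see. It is a minimal sketch under simplifying assumptions, and the function name is hypothetical. The model assumes three parts: the secondary takes some time to detect the failure, failover plus the DNS update takes some more, and a client that resolved the old record just before the update may keep using the cached old IP for up to one full TTL. It also assumes every cache honours the TTL, which real resolvers and clients do not always do.

```python
def worst_case_reconnect_seconds(detection_s: int, failover_s: int, dns_ttl_s: int) -> int:
    """Hypothetical upper bound on client downtime after a WAN-mode failover.

    Simplified model: time to detect the failure, plus time to bring up the
    secondary and update DNS, plus one full TTL during which a client may
    still hold the old (now dead) IP address in its DNS cache.
    """
    if min(detection_s, failover_s, dns_ttl_s) < 0:
        raise ValueError("durations must be non-negative")
    return detection_s + failover_s + dns_ttl_s


if __name__ == "__main__":
    # Illustrative numbers only: 30 s detection, 120 s failover.
    for ttl in (3600, 300, 60):
        print(f"TTL {ttl:>4} s -> up to {worst_case_reconnect_seconds(30, 120, ttl)} s")
```

With an hour-long TTL, the cached record dominates everything else. This is why WAN mode only pays off if the vCenter record has a short TTL.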

Mike Laverick has done a great in-depth Heartbeat overview and installation guide.

http://www.rtfm-ed.co.uk/2010/09/17/a-guide-to-vcenter-heartbeat-server/

So that’s a step in the right direction, but Heartbeat still has some problems with its implementation:

- You need to fail over between the sides regularly to keep the machine accounts in sync otherwise the secondary will fall off the network.

- When you patch vCenter, you need to fail over to the secondary and apply the patches there as well.

- Upgrading vCenter is now more complicated as you need to upgrade both sides separately.

- Not all data is synced between the servers so log files, custom scripts and anything else stored on the servers is not synchronised.

- Running linked mode and Heartbeat adds another whole level of complication when you need to troubleshoot linked mode.

Taking a step back and looking at service availability as a whole, a clustering or replication technology like Neverfail, or even Microsoft Cluster Service (if it were supported), is not the right solution to the problem.

For vCenter to be truly available, it has to stop being a great big fat single point of failure. Right now it is still one, even after you’ve thrown HA and Heartbeat at it.

Your truly critical infrastructure services don’t rely on fix-it clustering technology to stay up and running. Active Directory, WINS and DNS are the best examples of a self-replicating distributed architecture with no single point of failure. Even your other infrastructure-type apps fare better. Many modern AV, monitoring, backup and batch scheduling applications don’t crumble when a single component fails.

From the beginning, Citrix has designed and built pretty much all of its business-critical applications with no single point of failure. Even when your MetaFrame / XenApp / XenDesktop farms have SQL dependencies, you get a grace period before service is affected, on some occasions up to 96 hours. During that time you may not be able to fully manage your whole environment, but clients are not affected. As a hypervisor, XenServer certainly isn’t as functionally rich as ESX and vCenter, but it doesn’t have a single point of failure. Citrix has seen the benefit of a scalable distributed architecture with no single point of failure for many years, and it’s time for VMware to play catch-up.

vCenter is now so critical that it needs near-constant availability. Maybe it needs to be cut down to size and become a lean, mean client-serving machine.

The core functions should be built as highly available critical services with no single point of failure. These are ESX(i) hosts talking to each other, storage and network connectivity, HA, FT, DRS, vMotion and connectivity for VDI brokers. ESX 4 already has a hidden management VM running as the management agent, and this is where these functions should live. Each host in a cluster, and even hosts in different clusters, should be able to synchronise with the others without relying on vCenter, I suppose in a similar way to HA. VDI brokers should be able to cache a list of all available hosts from vCenter. They could then talk directly to any host in a cluster to power VMs on or off and allow VDI connections, much as XenServer works.
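The broker behaviour proposed above can be sketched as follows. This is an illustration of the idea, not of any real VMware View, XenDesktop or vSphere API. `Host`, `Broker`, `HostUnavailable` and their methods are all hypothetical. The sketch assumes that, in the proposed design, any reachable host's management agent can accept a power-on request for the cluster. The broker refreshes its cached host list while vCenter is up and keeps the last good list when vCenter is down (modelled here as receiving `None`).

```python
from __future__ import annotations

from dataclasses import dataclass, field


class HostUnavailable(Exception):
    """Hypothetical error: a host's management agent cannot be reached."""


@dataclass
class Host:
    name: str
    up: bool = True
    powered_on: set = field(default_factory=set)

    def power_on(self, vm: str) -> None:
        # Hypothetical per-host call; assumes the host's management agent
        # can act for the cluster without vCenter (the proposed design).
        if not self.up:
            raise HostUnavailable(self.name)
        self.powered_on.add(vm)


class Broker:
    def __init__(self) -> None:
        self.cached_hosts: list[Host] = []

    def refresh_from_vcenter(self, vcenter_hosts: list[Host] | None) -> None:
        # None models "vCenter is down": keep serving from the last good cache.
        if vcenter_hosts is not None:
            self.cached_hosts = list(vcenter_hosts)

    def power_on(self, vm: str) -> str:
        # Try each cached host in turn; any reachable host will do.
        for host in self.cached_hosts:
            try:
                host.power_on(vm)
                return host.name
            except HostUnavailable:
                continue
        raise RuntimeError(f"no cached host could power on {vm}")


if __name__ == "__main__":
    hosts = [Host("esx01", up=False), Host("esx02")]
    broker = Broker()
    broker.refresh_from_vcenter(hosts)  # vCenter up: cache the host list
    broker.refresh_from_vcenter(None)   # vCenter down: cache is kept
    print(broker.power_on("desktop-042"))
```

The design choice is that vCenter becomes a source of configuration the broker reads occasionally, rather than a dependency on every user login.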

The rest of the non-core functionality could then live in a now non-critical vCenter database. That includes gathering stats, events and tasks, kept distinctly separate from the core availability functions. vCenter would still provide that simple single pane of glass to view your environment.

If this isn’t the right approach, then the way to go is a federated, distributed architecture. There would be multiple connection points, information would be replicated across vCenters, and your client could connect to any instance.

Maybe these ideas are not the solution, and there are undoubtedly other options. I’m just illustrating ideas that drive home the point: vCenter needs to pull its socks up and become a truly enterprise-grade, highly available solution.

People whose vCenter issues cause downtime for their users quickly start looking around for other options. That’s just when Citrix, and maybe in the future Microsoft, come knocking on the door (although Microsoft’s current management applications suffer from severe bloatware). Your infrastructure is only as good as its weakest link. If you can’t ensure availability at every step along the chain, people are going to look at alternatives.

Maybe there are signs that things are going to change. VMware has released vCloud Director, which suggests it may be thinking a bit more about availability, at least at that level. At the moment, though, vCD still sits on top of the same vCenter. If that’s still your single point of failure, your cloud will come crashing down.

VMware, you currently have the world’s best hypervisor, so don’t let your side down with a substandard vCenter.

Hope you enjoyed that cuppa!

One of the best articles I’ve read in a while. Well done, Julian. Two takeaways from me: both VDI and Cloud depend on vCenter, so this is critical to get right but is usually and wrongly a low priority.

Julian – a great article. Personally, I’m happy to see the direction VMware is starting to take. The architectural model (and the FLEX UI model) of vCloud director represents “directionally” where VMware is going. They’ve dropped tidbits on this front publicly – and for what it’s worth, I know they are working furiously on it.

I’d argue that vCenter is the best example of centralized management for a virtualized datacenter today – but that isn’t good enough (which I think is your point). I’m positive with the direction, though…

Great article Julian, you hit the nail on the head! I recently raised many of these concerns in an internal engineering debate, but you really covered every angle. Let’s hope VMware can get this right!