Planning for and testing against failure, big and small

After my previous post about vCenter availability I thought I should expand on some other factors related to availability and what you should be thinking about to protect your business against failure.

Too often IT solutions are put in place without properly considering what could go wrong, and then people get surprised when something does. Sometimes the smallest things can make the biggest difference, and you can end up with your business not operating because some very small technical glitch that could have been avoided brings everything down.

Planning for failure should be a major part of any IT project and the cliche is certainly true, “If you fail to plan you plan to fail.” Planning for failure includes big things like a full site disaster recovery plan but also includes small things like ensuring all your infrastructure components are redundant and you don’t have any single points of failure.

The Big Things:

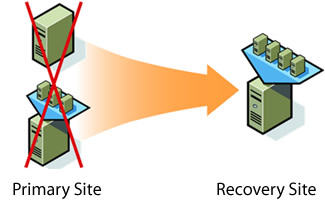

The big decisions are often worked out at a high level such as whether you need a separate business recovery site to recover from a building failure. Can you provide business recovery between two existing offices in separate cities, countries or continents?

Generally you plan for a primary site failure and ensure you can bring up critical business systems so that a reduced number of your primary site staff can work in another location. This means you don’t necessarily need as much equipment in your recovery site as in your primary site. Hopefully you have worked out that you need x number of people from each department to keep your business running, and have catered for this.

Virtual environments provide some great solutions to achieve this. You can have VMs at your recovery site running on different hardware from your primary site and powered up only when required. You can also mirror VM storage from one site to another and have your primary site VM come up exactly as it was (with maybe an IP change) in your recovery site. Business recovery became a lot easier once you started using VMs.

As VMs are the all-singing, all-dancing solution to everything and you’ve now made everything possible a VM, you have started moving even more of your infrastructure into your datacenter. This means you have more to recover, and it’s now not just servers.

Look at VDI as an example. One of the great use cases for VDI is remote desktops. You may well be using published applications and published desktops of the Citrix/terminal server kind but need actual desktops in a remote site where a terminal server can’t deliver what you need. Say you have a whole bunch of developers working in a remote site who need to develop against a database in your main site. With VMs it’s very easy to create desktops and have your developers connect remotely and work “locally” to your database data. They may just use RDP to connect or a “real” VDI solution with a broker in between.

What happens now when your primary site fails? Say it is a fire or a hurricane and your primary site is no longer available. Your staff can’t get into your primary site anyway, but you catered for a reduced number of staff working in your recovery site so you are covered. But what about your developers? They are still coming into work in their remote office and are not affected locally by the disaster, but now can’t work as their VMs are not available. You have catered for a cut down environment at your recovery site for local staff but what about your remote desktops? Now, with VDI, a site failure is far more serious, potentially affecting many more people who work remotely. As soon as you have people connecting remotely you need to expand your business recovery environment to cater not only for a reduced number of local staff but possibly for all of your remote staff. You don’t want staff in offices across the globe twiddling their thumbs due to a disaster in your main site.

OK, so that’s an example of a big site disaster thing that you need to ensure you are protecting your business from whether people come into the office locally or work remotely.

The Small Things:

What I also want to highlight is the smaller things, which cover how you protect yourself from failure within a site, ensuring things stay up and running without having to invoke a full site recovery.

Are you looking at each component of your infrastructure at each level and doing two things?

- Do you understand what you are and are not protected against?

- Have you tested all the little bits and pieces fully?

You probably have many systems in place that you have designed to be highly available within a local site. You may be using HA for your VMs, have clustered storage, redundant switches, secondary domain controllers, a web farm etc. but have you really considered what your protection doesn’t give you?

Say for example you are using VMware vCenter Server Heartbeat to protect your vCenter server. What does this technology actually protect you against?

As you have two separate OS instances, if you have an OS corruption you should be able to fail over and recover. If you install some software or a Microsoft patch and you haven’t updated the other half you should be able to fail over and be OK. However, if you have an erroneous database entry, which unfortunately does happen, you are NOT protected. That glitch in the database introduced by vCenter is immediately replicated to the other server and your clustering solution hasn’t saved your day. vCenter fails and none of your VDI clients can connect. “But I have Heartbeat installed to protect vCenter!” you tell your manager, but Heartbeat doesn’t protect against a database issue, and neither does MS Cluster. FT doesn’t protect you against an OS corruption. HA doesn’t protect you against a firmware bug that shuts down every host due to a faulty temperature reading.

When planning any availability solution you really do need to draw up a failure scenario list working out all the things that could potentially happen and then work out what you would and wouldn’t be protected against. Share this list with everyone involved in the infrastructure so when your availability solution or clustering technology doesn’t protect you from failure you don’t have the powers that be coming down on you like a ton of bricks saying “I thought we were protected against anything and everything failing”.
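A failure scenario list can be as simple as a table of solutions against scenarios. Here is a minimal Python sketch of one, filled in only with the examples mentioned above. The names `COVERAGE`, `unprotected` and `unassessed` are my own for illustration, and a real list would have many more rows.

```python
# Hypothetical failure-scenario matrix built only from the examples in this post.
# True = protected, False = not protected, missing = nobody has assessed it yet.
COVERAGE = {
    "vCenter Server Heartbeat": {
        "OS corruption": True,
        "software or patch installed on one node only": True,
        "erroneous database entry": False,
    },
    "MS Cluster": {"erroneous database entry": False},
    "FT": {"OS corruption": False},
    "HA": {"firmware bug shutting down every host": False},
}


def unprotected(coverage):
    """Return sorted (solution, scenario) pairs explicitly marked as not protected."""
    return sorted(
        (solution, scenario)
        for solution, scenarios in coverage.items()
        for scenario, protected in scenarios.items()
        if not protected
    )


def unassessed(coverage):
    """Return sorted (solution, scenario) pairs that have no entry at all."""
    all_scenarios = {s for scenarios in coverage.values() for s in scenarios}
    return sorted(
        (solution, scenario)
        for solution, scenarios in coverage.items()
        for scenario in all_scenarios - set(scenarios)
    )
```

Printing `unprotected(COVERAGE)` gives you the list to share with the powers that be, and `unassessed(COVERAGE)` shows the gaps where nobody has yet worked out whether you are protected.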

The job of anyone architecting or designing availability solutions is first of all to plan fully for failure but secondly to understand the limitations of your availability solution and maybe that means you need to change your availability solution.

Testing:

Have you actually tested each of your components and documented the testing? You have just installed new hosts, new switches, new storage. Have you tested each and every little failure scenario? Are you sure? Have you proved that when a switch uplink fails, network traffic does actually route over the other uplink? Have you fully tested failover of your storage cluster? When a PDU fails in a rack, does your chassis stay powered on? Do your VMs definitely stay up and running when your blade network switch dies? Have you vMotioned a VM between every host to check it works as you expect? (When building hosts, it should always be policy to take a test VM and check it can vMotion to and from the new host before you put the host into production.)

Even if you have been thorough and gone through as many tests as you could think of, did you write down the results on anything other than a post-it? What happens next time you need to add to your infrastructure? Can you remember what tests you ran? When something does fail and causes an outage, how can you be sure what you tested? How can you satisfy your manager that you did everything you could to protect against component failure when there’s no test sheet?

So, formalise your testing. It doesn’t mean it will take a lot longer, but it does mean you have a structured and documented way to prove your design is resilient against failure. Get all your teams together: facilities, networking, storage, VM people. The more people involved in the scenario planning, the better your testing will be.

An example:

Say you have deployed a new rack full of blade servers with new switches upstream. You have everything working as expected. What would your test plan look like?

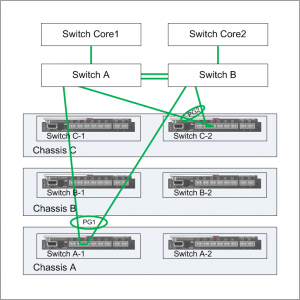

You have 3 blade chassis in a rack with 4 x 10GbE uplinks in 2 pairs coming out of the rack to upstream switches. You need to test against chassis component failure and to test redundant network connectivity.

Your rack networking may look something like this:

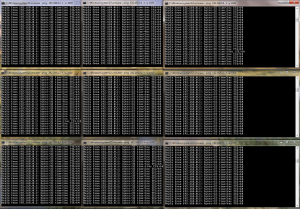

I like to put together an Excel spreadsheet listing all the tests. This helps focus attention on exactly what is tested and forms a testing document as well.

Here’s a sample testing spreadsheet.

For a test like this I would ensure I have an ESX host in each chassis with a test VM running on each host, so you have a VM running in each chassis.

Each ESX host will have a Service Console IP address and a VMkernel IP address.

Set up 9 continuous pings:

- 3 to the actual VM IP addresses

- 3 to the Service Console IP address of each ESX host

- 3 to the VMkernel IP address of each ESX host

Once you have your pings running all visible on the screen you are ready to start your test.
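If you would rather count dropped pings with a script than watch nine windows, something like the following Python sketch could do it. It assumes a Linux-style `ping` command (`-c 1 -W 1`), and the host names and `192.0.2.x` addresses are placeholders from the documentation range, not real values. The `probe` parameter is my own addition so the counting logic can be tried without touching the network.

```python
import subprocess

# Placeholder addresses (RFC 5737 documentation range); replace with your own.
HOSTS = {
    "chassis1-esx": {"vm": "192.0.2.11", "service_console": "192.0.2.21", "vmkernel": "192.0.2.31"},
    "chassis2-esx": {"vm": "192.0.2.12", "service_console": "192.0.2.22", "vmkernel": "192.0.2.32"},
    "chassis3-esx": {"vm": "192.0.2.13", "service_console": "192.0.2.23", "vmkernel": "192.0.2.33"},
}


def build_targets(hosts):
    """Flatten per-host addresses into (label, ip) pairs: VM, Service Console, VMkernel."""
    targets = []
    for name, addrs in hosts.items():
        for role in ("vm", "service_console", "vmkernel"):
            targets.append((f"{name}/{role}", addrs[role]))
    return targets


def system_ping(ip):
    """Send one echo request with the Linux ping CLI; True if a reply came back."""
    result = subprocess.run(
        ["ping", "-c", "1", "-W", "1", ip],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def count_drops(targets, rounds, probe=system_ping):
    """Probe every target `rounds` times and return {label: dropped_count}."""
    drops = {label: 0 for label, _ in targets}
    for _ in range(rounds):
        for label, ip in targets:
            if not probe(ip):
                drops[label] += 1
    return drops
```

Calling `count_drops(build_targets(HOSTS), rounds=30)` while you pull a cable gives a dropped count per address. Note that this sketch probes the targets one after another, so a round slows down when several targets are unreachable; the separate continuous pings described above do not have that limitation.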

So, what is there to test?

Start at the bottom of your infrastructure and work your way up.

Infrastructure

- You should have dual power sources to your rack. Fail each PDU in turn.

Chassis Administration

- Fail each chassis’ administration module in turn

Chassis Internal Networking

- Fail each chassis network switch in turn

- Fail each rack’s network stack cable

Chassis External Networking

- Remove and replace each network uplink in turn

Upstream Switch Networking

- Fail each switch port group in turn

- Fail switch cross links

- Fail uplinks from each switch to the core network

- Fail each switch in turn
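The test list above translates directly into a blank test sheet. As a rough sketch, this Python snippet writes it out as CSV, which Excel can open. The column names are my own suggestion and are not taken from the sample spreadsheet.

```python
import csv
import io

# Test plan transcribed from the list above, bottom of the stack first.
TEST_PLAN = [
    ("Infrastructure", "Fail each PDU in turn"),
    ("Chassis Administration", "Fail each chassis' administration module in turn"),
    ("Chassis Internal Networking", "Fail each chassis network switch in turn"),
    ("Chassis Internal Networking", "Fail each rack's network stack cable"),
    ("Chassis External Networking", "Remove and replace each network uplink in turn"),
    ("Upstream Switch Networking", "Fail each switch port group in turn"),
    ("Upstream Switch Networking", "Fail switch cross links"),
    ("Upstream Switch Networking", "Fail uplinks from each switch to the core network"),
    ("Upstream Switch Networking", "Fail each switch in turn"),
]

# Illustrative column names: one "pings dropped" column per group of 3 pings.
COLUMNS = [
    "Layer",
    "Test",
    "VM pings dropped",
    "Service Console pings dropped",
    "VMkernel pings dropped",
    "Notes",
]


def render_sheet(plan):
    """Return the blank test sheet as CSV text, one row per test."""
    buf = io.StringIO()
    writer = csv.writer(buf, lineterminator="\n")
    writer.writerow(COLUMNS)
    for layer, test in plan:
        writer.writerow([layer, test, "", "", "", ""])
    return buf.getvalue()
```

Write the result of `render_sheet(TEST_PLAN)` to a `.csv` file and you have a sheet ready to print and fill in.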

Print out the Excel sheet and mark down the number of pings dropped for each test.

Go through your test sheet methodically and record the scores. If you have failures you will then know where to look and can get the relevant person working on fixing the issue. Update the spreadsheet with the scores and store it somewhere you won’t forget. If something is fixed, retest and update the spreadsheet. Next time you deploy a new rack you have your previous test steps and results and know what to expect.
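Once the scores are stored, comparing a new rack against the previous one is straightforward. A small Python sketch, assuming you keep the total dropped pings per test in a dictionary. The numbers below are invented purely to show the shape of the data and are not real test results.

```python
# Invented example scores (total pings dropped per test), for illustration only.
PREVIOUS_RACK = {
    "Fail each PDU in turn": 0,
    "Fail each chassis network switch in turn": 1,
    "Remove and replace each network uplink in turn": 1,
}
NEW_RACK = {
    "Fail each PDU in turn": 0,
    "Fail each chassis network switch in turn": 1,
    "Remove and replace each network uplink in turn": 12,
}


def regressions(baseline, current):
    """Return {test: (baseline_drops, current_drops)} for tests that got worse.

    A test with no baseline entry is compared against 0 dropped pings.
    """
    return {
        test: (baseline.get(test, 0), drops)
        for test, drops in current.items()
        if drops > baseline.get(test, 0)
    }
```

With these made-up numbers, `regressions(PREVIOUS_RACK, NEW_RACK)` flags only the uplink test, which tells you where to look first.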

If you do have a failure somewhere down the line, you can clearly show to anyone exactly what tests were run and the scores and therefore be able to troubleshoot the issue more clearly.

It’s all well and good having the big recovery processes planned, but don’t forget about your component testing.

Do your business a favour and look into the little things to make sure a network uplink going bad doesn’t take your whole environment out.
