VMware has announced its latest update to version 5.5 of its global virtualisation powerhouse, vCloud Suite.

To read the updates for all the suite components, see my post: What’s New in vCloud Suite 5.5: Introduction

Virtual SAN (VSAN) is one of the highlights of the new vCloud Suite 5.5 and a strong push further into VMware’s vision of the Software Defined Data Center (SDDC). VSAN was previewed at VMworld 2012, when it was called VMware Distributed Storage. VSAN is in public beta and won’t be available with the initial release of vSphere 5.5 but with the first update, which is scheduled for next year.

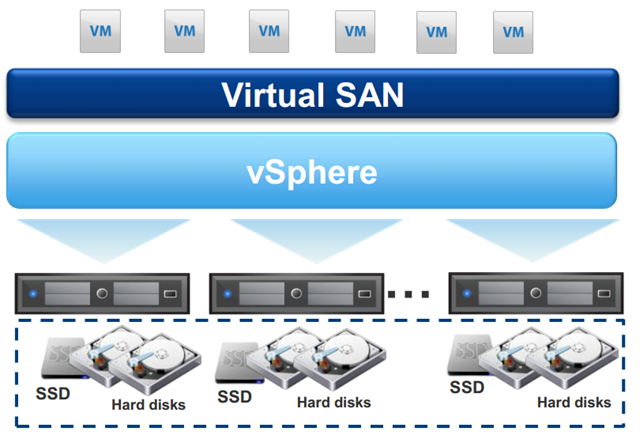

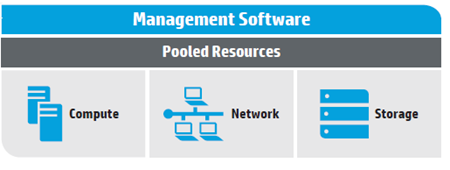

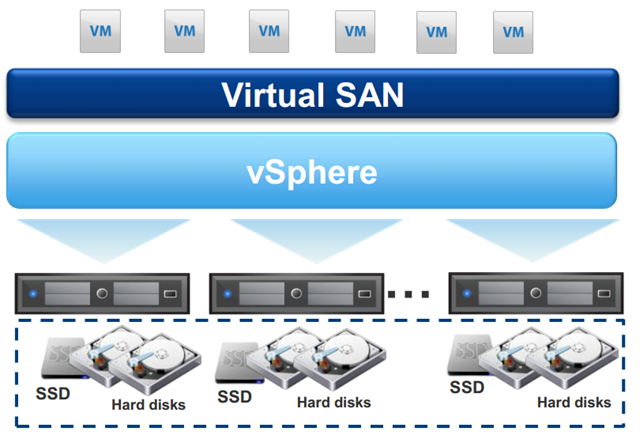

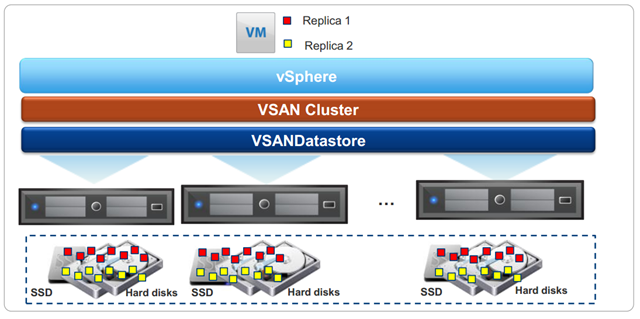

VSAN is a VMware-developed, software-based storage solution built into the ESXi hypervisor. It takes each host’s local disk drives (SSD and HDD) and aggregates them so they appear as a cluster-wide pool of storage shared across all hosts.

It is a highly available, scale-out clustered storage solution to host VMs. It brings CPU, memory and storage closer together, which is certainly the idea that Nutanix has been successfully running with.

In a simplistic way this is just another Virtual Storage Appliance (VSA) but embedded within the hypervisor rather than as an appliance. However, a VSA in my opinion by itself isn’t a true part of the SDDC in the same way that a firewall that happens to be running as a VM isn’t true software defined networking.

VMware makes VSAN more software-defined than a standard VSA by implementing automated storage management with per-VM policies and per-VM QoS enforcement. That’s a lot to digest but I’ll get back to what it actually means.

VSAN is fully integrated with vCenter, managed through the vSphere Web Client and works seamlessly with HA, DRS and vMotion. It is very easy to set up, configure and manage and yet provides enterprise features and performance scaling from terabytes to petabytes.

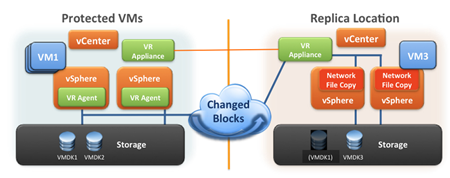

Thin provisioning is available along with support for VM snapshots, cloning, backup and replication using vSphere Replication and Site Recovery Manager.

VMware is listing VSAN use cases as VDI, test/dev, Big Data and DR. I think they are covering themselves by not including production workloads as this is a version 1 release and they want to see how it works in the real world at scale before committing and will also want to mature the functionality in the future. I’d love to see anyone who considers a real VDI deployment not as important as production though!

If you’re wondering, there are three main differences between VSAN and VMware’s own vSphere Storage Appliance (VSA). VSAN is implemented in the hypervisor, whereas VSA is a virtual appliance presenting an NFS datastore. VSAN can use Flash as a read cache and write buffer, which VSA can’t. And VSAN has the whole policy-based per-VM management, which VSA does not.

Requirements

You need a minimum of 3 vSphere 5.5 ESXi hosts with local storage to create a VSAN. You can scale out to 8 hosts providing storage to the VSAN with the maximum cluster size of 32 hosts being able to consume that storage.

You obviously need vCenter to manage VSAN.

You need at least 1 x HDD and 1 x SSD in each host contributing storage. SSDs are used as a read cache and write buffer, and the HDDs are used as a persistent store. Not all hosts in the cluster have to have local storage; some can just be compute nodes, but you need at least 3 with local storage to create a VSAN. Hosts don’t have to have the same drive sizes as long as each host contributing storage has at least 1 x SSD and 1 x HDD. Hosts with no storage can still use the VSAN.
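The host and disk rules above can be sketched as a quick sanity check. This is only an illustration in plain Python, not anything VSAN or vCenter provides: the `Host` class, `check_cluster` function and host names are hypothetical, and the limits are simply the figures quoted in this post.

```python
from dataclasses import dataclass
from typing import List

# Limits as quoted in this post (not read from any VMware API).
MIN_STORAGE_HOSTS = 3   # minimum hosts contributing storage
MAX_STORAGE_HOSTS = 8   # maximum hosts contributing storage
MAX_CLUSTER_HOSTS = 32  # maximum hosts consuming the storage


@dataclass
class Host:
    """Hypothetical description of an ESXi host's local disks."""
    name: str
    ssds: int = 0
    hdds: int = 0

    @property
    def contributes_storage(self) -> bool:
        # A contributing host needs at least 1 SSD and 1 HDD.
        return self.ssds >= 1 and self.hdds >= 1


def check_cluster(hosts: List[Host]) -> List[str]:
    """Return a list of problems; an empty list means the rules are met."""
    problems = []
    for h in hosts:
        # Disks present but not the required SSD + HDD pair.
        if (h.ssds or h.hdds) and not h.contributes_storage:
            problems.append(f"{h.name}: needs at least 1 SSD and 1 HDD to contribute storage")
    storage_hosts = [h for h in hosts if h.contributes_storage]
    if len(storage_hosts) < MIN_STORAGE_HOSTS:
        problems.append(f"only {len(storage_hosts)} storage hosts, need {MIN_STORAGE_HOSTS}")
    if len(storage_hosts) > MAX_STORAGE_HOSTS:
        problems.append(f"{len(storage_hosts)} storage hosts, maximum is {MAX_STORAGE_HOSTS}")
    if len(hosts) > MAX_CLUSTER_HOSTS:
        problems.append(f"{len(hosts)} hosts in cluster, maximum is {MAX_CLUSTER_HOSTS}")
    return problems
```

A cluster of three hosts with disks plus one diskless compute node passes, since the compute node simply consumes the storage without contributing any.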

You cannot have ESXi and VSAN using the same disk, and in this release VSAN won’t work with Auto Deploy. This means you will need another disk or disks for the ESXi boot partition, or you will have to boot from SAN or use an SD card or USB stick for the ESXi installation.

You need a SAS/SATA RAID controller that works in pass-through or HBA mode, as the disk needs to be presented as a SCSI device to VSAN. Some PCIe Flash devices are presented as a block device and so won’t work with VSAN. You can use the same RAID controller for the SSD and HDD disks, but if your RAID controller is going to be an IO bottleneck you would need to think about having separate controllers or spreading out the IO.

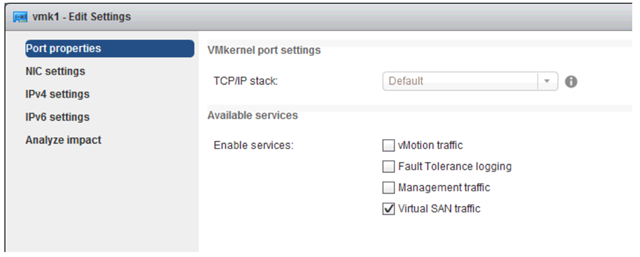

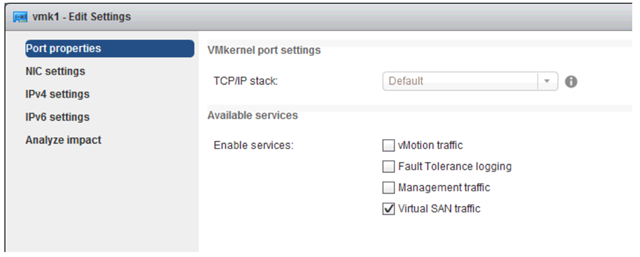

The VSAN uses a VMkernel port to connect the local hosts’ storage together. You can use either 1Gb or 10Gb networking but 10Gb is obviously preferred. You tag a VMkernel port with the Virtual SAN traffic service just like you do with vMotion and FT.

Policies and QoS

VM storage policies are what set VSAN apart from a standard VSA and really make it software-defined, but this can take a little time to get your head around so bear with me.

To understand how this works you need to separate the underlying VSAN datastore from the VM and put a layer of policy between the two.

When you create a VSAN cluster, you select the local disks to use across your hosts and a vsanDatastore is created automatically. You don’t actually just deploy a VM directly to this vsanDatastore; that wouldn’t be very software-defined, would it?

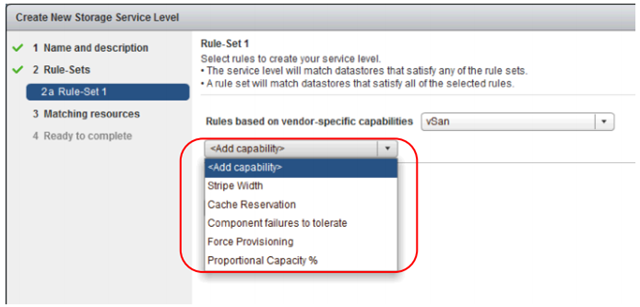

What happens is that VSAN uses the standard VMware vStorage APIs for Storage Awareness (VASA) to advertise a number of capabilities the vsanDatastore can offer VMs, such as how many host or disk failures to tolerate, how many IOPS a VM requires and how much of a VM’s reads should always be kept in SSD cache.

What you then do is create a VM Storage Policy to define how you want your VMs to use these capabilities. The standard explanation for these kinds of things is Gold, Silver and Bronze policies but that isn’t particularly meaningful. You can in fact use different policies for individual VM disk files (called storage objects).

You could create a simple VM Storage Policy called “High Performance VMs” which would say that VM disks based on this policy are striped across at least 6 disks for higher performance. You could then create another simple policy called “Critical Availability VMs” which would ensure that VM disks based on this policy are stored across at least 3 hosts so that your VMs continue to function even after a host failure. You can also create policies which specify multiple capabilities such as “High Performance Critical Availability VMs” which would ensure data is striped across 6 disks for higher performance and spread across at least 3 hosts (remember, you can have multiple disks in a host).

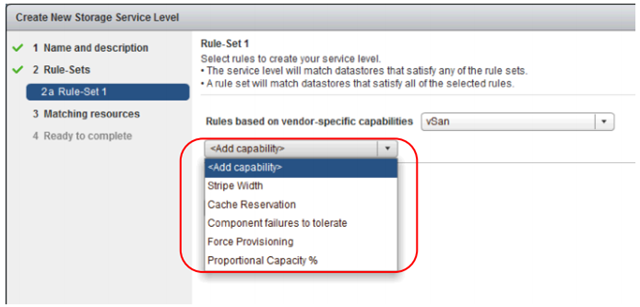

For VSAN, you can create policies based on 5 capabilities:

Stripe Width

The number of physical disks across which each replica of a storage object is distributed, up to a maximum of 12. A higher stripe width can give you better performance (throughput and bandwidth) but also results in higher system resource use.

Component Failures To Tolerate

Defines the number of host, disk or network failures a storage object can tolerate up to a maximum of 3. For “n” failures tolerated, “n+1” copies of the object are created and “2n+1” hosts are required.

Proportional Capacity %

Percentage of the logical size of the storage object that should be reserved (thick provisioned) up to 100%. The rest of the storage object is thin provisioned.

Cache Reservation

Flash capacity reserved as read cache for the storage object which is specified as a percentage of the logical size of the object up to 100%. This is only used for addressing read performance issues. Reserved flash capacity cannot be used by other objects and unreserved Flash is shared fairly between all objects.

Force Provisioning

If this option is enabled, the object will be provisioned even if the policy specified in the storage service level can’t be satisfied with the resources currently available in the cluster. VSAN will try to bring the object into compliance if and when resources become available. This is disabled by default.
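To make the five capabilities and their limits more concrete, here is a minimal sketch that models a policy as a plain Python object. The `StoragePolicy` class and its field names are hypothetical and are not part of any VMware API. The ranges and the “n+1” copies and “2n+1” hosts arithmetic are taken from the descriptions above, and the reservation sizes are simply the stated percentage of the object’s logical size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StoragePolicy:
    """Hypothetical model of the five VSAN policy capabilities."""
    name: str
    stripe_width: int                 # disks per replica, 1 to 12
    failures_to_tolerate: int         # host/disk/network failures, 0 to 3
    proportional_capacity_pct: int    # thick-provisioned share, 0 to 100
    cache_reservation_pct: int        # reserved read cache, 0 to 100
    force_provisioning: bool = False  # disabled by default

    def __post_init__(self):
        if not 1 <= self.stripe_width <= 12:
            raise ValueError("stripe width must be between 1 and 12")
        if not 0 <= self.failures_to_tolerate <= 3:
            raise ValueError("failures to tolerate must be between 0 and 3")
        for pct in (self.proportional_capacity_pct, self.cache_reservation_pct):
            if not 0 <= pct <= 100:
                raise ValueError("percentages must be between 0 and 100")

    @property
    def copies(self) -> int:
        # For n failures tolerated, n+1 copies of the object are created.
        return self.failures_to_tolerate + 1

    @property
    def hosts_required(self) -> int:
        # For n failures tolerated, 2n+1 hosts are required.
        return 2 * self.failures_to_tolerate + 1

    def reserved_capacity_gb(self, logical_size_gb: float) -> float:
        # Share of the logical size that is thick provisioned.
        return logical_size_gb * self.proportional_capacity_pct / 100

    def reserved_cache_gb(self, logical_size_gb: float) -> float:
        # Flash read cache reserved for this object.
        return logical_size_gb * self.cache_reservation_pct / 100
```

For example, a policy tolerating 2 failures works out to 3 copies and 5 hosts, and a 50% proportional capacity on a 100 GB disk reserves 50 GB with the rest thin provisioned.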

When you deploy a VM you don’t actually select a datastore on which to provision the VM disks but rather assign the VM storage provisioning to one of the policies you have created with possibly separate policies for each VM disk.

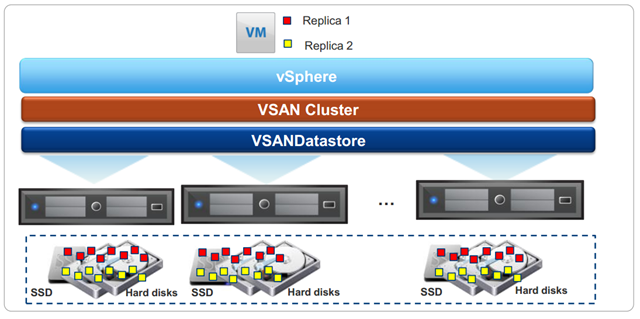

When the VM is deployed, the VM Storage Policy is sent down to the VSAN which then lays out the VMDK across the cluster to satisfy the policy settings. Any VM deployed with your “High Performance Critical Availability VMs” policy is therefore stored based on the policy rules.

With VSAN this means you can create a single cluster-wide datastore and enforce different QoS policy levels for each VM or virtual disk. That is pretty powerful stuff. If you later decide to change a policy, all VMs or disks based on that policy will have their storage layout amended to comply.
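The relationship between policies and disks can be pictured with a small model. This is purely a sketch of the idea: the `PolicyRegistry` class, its methods and the rule names are hypothetical and do not correspond to any vSphere API. The point is that each virtual disk refers to a policy by name, so changing the policy once affects every disk assigned to it.

```python
class PolicyRegistry:
    """Hypothetical in-memory model of policies and per-disk assignments."""

    def __init__(self):
        self.policies = {}      # policy name -> dict of rules
        self.assignments = {}   # (vm name, disk name) -> policy name

    def define(self, name, **rules):
        self.policies[name] = dict(rules)

    def assign(self, vm, disk, policy_name):
        # Each virtual disk can carry its own policy.
        if policy_name not in self.policies:
            raise KeyError(f"unknown policy: {policy_name}")
        self.assignments[(vm, disk)] = policy_name

    def effective_rules(self, vm, disk):
        return self.policies[self.assignments[(vm, disk)]]

    def update(self, name, **rules):
        """Change a policy and return the disks whose layout must be amended."""
        self.policies[name].update(rules)
        return sorted(key for key, p in self.assignments.items() if p == name)


registry = PolicyRegistry()
registry.define("High Performance VMs", stripe_width=6)
registry.define("Critical Availability VMs", failures_to_tolerate=1)

# One VM, two disks, two different policies.
registry.assign("db01", "os.vmdk", "Critical Availability VMs")
registry.assign("db01", "data.vmdk", "High Performance VMs")
registry.assign("web01", "os.vmdk", "Critical Availability VMs")

# Changing the policy once affects every disk assigned to it.
affected = registry.update("Critical Availability VMs", failures_to_tolerate=2)
```

Here `affected` lists the two `os.vmdk` disks, while `data.vmdk` on `db01` keeps its own policy untouched.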

This policy based system isn’t just for VMware VSAN. EMC, NetApp, Dell etc. will have their own set of capabilities their storage arrays can provide which will be sent up through VASA to be used within Storage Policies.

VSAN is pretty exciting as it brings shared storage to everyone without requiring a traditional SAN. What is even more interesting is we can see the power of policy based VM storage provisioning. I can already think of ways this can be extended by having capabilities available for replication based on various RPOs and RTOs.

It will be interesting to see how the recently released PernixData FVP plays in this area as FVP is a transparent storage performance tier and also runs as part of the hypervisor leveraging SSD for high performance and HDD for capacity but has deduplication built in. Can you use FVP on top of VSAN? Interesting times.

Sure, flash is revolutionising array storage but it’s going to take time to replace spinning rust with flash and again it often comes down to cost. Purchasing an all-flash array or even just a shelf of flash for your existing array is expensive and a large incremental jump when perhaps you just need some more oomph during your month-end job runs.
